Knowledge gained from Projects

CATcher:

MarkBind:

RepoSense:

TEAMMATES:

CATcher

ISAAC NG JUN JIE

Angular

Angular components are split into three parts, *.component.ts, *.component.html and *.component.css

*.component.ts

@Component({

selector: 'app-auth',

templateUrl: './auth.component.html',

styleUrls: ['./auth.component.css']

})

This segment is found at the top of the *.component.ts files.

selectorindicates the keyword that will be used in*.component.htmlfiles to identify this component. For example,<app-auth> </app-auth>templateUrlindicates the filepath to the*.component.htmlfile.styleUrlsindicates the filepath(s) to the*.component.cssfile(s).

*.component.html

This is the template file. Template files use mostly HTML syntax, with a bit of angular specific syntax included. This includes the structural directives such as *ngIf, *ngFor, etc. The documentation is quite sufficient for understanding the angular syntax.

*.component.css

This is a stylesheet, using normal css. There is a ::ng-deep selector available, which promotes a component style to global style.

Arcsecond

Arcsecond is a string parsing library for javascript. An example arcsecond parser is as follows:

export const TutorModerationTodoParser = coroutine(function* () {

yield str(TODO_HEADER);

yield whitespace;

const tutorResponses = yield many1(ModerationSectionParser);

const result: TutorModerationTodoParseResult = {

disputesToResolve: tutorResponses

};

return result;

});

str(TODO_HEADER)matches the starting of the string withTODO_HEADER.whitespacematches the next part of the string with one or more whitespaces.many1(ModerationSectionParser)applies theModerationSectionParserone or more times.

GraphQL

GraphQL is a architecture for building APIs like REST. Unlike REST where the server defines the structure of the response, in GraphQL, the client and request the exact data they need.

Node 14.x support on macos

Apple laptops changed to using ARM64 architecture back in 2020. This meant that Node versions released before then were not directly supported by the ARM64 architecture. This caused issues with the github actions. There is a workaround for this by running arch -x86_64 and manually installing node instead of using the setup-node Github action, but the simpler solution was to upgrade the test to use Node version 16.x.

Playwright testing

Tests the application by hosting it on a browser then interacting with html components and checking for expected behaviour. You can use the Playwright extension for chrome and the extension for visual studio code to generate tests and selectors. ...

KOO YU CONG

NPM (Node Package Manager)

Managing packages

Issue faced: I faced an issue when setting up the project locally that was related to a default NPM package used by Node to build and compile native modules written in C++. I had to dig deep into how packages were being installed and managed by NPM to resolve the issue and have documented my findings as follows:

Knowledge gained:

- NPM is the default package manager for

Node.js, helping developers to install and manage packages (i.e. libraries/dependencies) viapackage.json - NPM hosts a collection of open-source packages at their online registry, where we can then install these packages to be used in our project

- Packages can be installed globally (i.e. system wide) or locally (i.e. per project, under the

node_modules/folder) - Installation of packages is essentially pulling the source code of the package from NPM online registry, and will be ran locally when required. Hence it is possible (but certainly not recommended) to modify the pulled source code locally, as mentioned in one of the workarounds to this issue

Reference: https://docs.npmjs.com/packages-and-modules

Custom script definitions

Issue faced:

I realised that we were using a npm command that I was very unfamiliar with, that is npm run ng:serve:web, and I wondered what this command meant

Knowledge gained:

- When running commands in the form of

npm run <command>, e.g.npm run ng:serve:web, these commands are actually self-defined scripts under thepackage.jsonfile. - These are different from built-in npm commands, e.g.

npm build

Reference: https://docs.npmjs.com/cli/v9/commands/npm-run-script

NVM (Node Version Manager)

Issue faced: CATcher uses Node 16 while WATcher uses Node 14 to build, it was hard to switch between node versions quickly when working on both projects

Knowledge gained: We can use NVM to easily manage and switch between different node versions locally

Angular

Components and Modules

A typical component in Angular consists of 3 files:

- A html file that defines the layout of the component

- A css file that provides styling to the UI

- A typescript file that controls the logic and behaviour of the application, typically handles the data of the application too

Each component can have a module file where we can state the components or modules that this component is dependent on (i.e. the imports array) and the components that is provided by this module (i.e. the declarations array). This helps increasing the modularity and scalability of the whole application.

As a developer coming from React, here are some clear differences I have observed:

- There is no concept of states in Angular and the data passing is 2-ways, when the user updates from the UI, the value is automatically updated in the component and vice versa, whereas in React we would have to use states and explicitly update the states via setState or similar functions.

- Instead of defining the layout of componenet and logic in the same file, Angular split them into 2 seperate files (i.e. the html and typescript file), personally I felt that this split helps enforce the MVC architecture more strictly, but also imposes more restrictions when it comes to components that have tightly coupled logic

Reference: Angular Component Overview

Component Lifecycle

Angular components has a lifecycle that goes from Initialization --> Change detection --> Destruction. Our application can use lifecycle hooks methods to tap in on the key events of the lifecycle of a component, this is crucial for the fact that we do not have states like we do in React, and we would often want to perform certain operations during key changes to the component.

This knowledge was crucial to understanding and fixing an existing CATcher bug (PR), where the bug could be fixed by tapping on key changes to the issue model used in the component.

Reference: Angular Component Lifecycle

CATcher

IssueTablesComponent and how issues are being shown in tables

While working on issue #1309, I had to delve deep into how the the IssueTablesComponent is implemented in order to create new tables. A few meaningful observations learnt is summarised as follows:

- The issues displayed in the table is mainly dependent on 2 things,

- The base issues data provided by

IssueService, which is initialized based onIssuesFilter, and will periodically pull the issues from github - The

filterswe inject when creating theIssueTablesComponent, where the base issues can be filtered down to the issues that we are concerned of - The action buttons and its respective functionalities are pre-defined in the

IssueTableComponentitself, we only specify the action buttons that we want when creating theIssuesTablesComponentthrough theactionsinput.

- The base issues data provided by

Github Workflows/Actions

How github workflows/actions are being triggered

Issue faced:

While working on the new phase (i.e. bug-trimming phase) for CATcher, the team decided to

use a feature-bug-trimming branch as the target branch we all merge into. However, I noticed that when we created PRs / merged PRs to that feature branch (see this issue for more details), there are no github workflows/actions being run. This puts us at the risk of failing tests without knowing, I spent some time to learn how github workflows/actions are being triggered.

Knowledge gained:

- The potential trigger points for workflows are defined under the

on:section within the workflow file (i.e..ymlfile) - We can automatically trigger the workflows when we

pushorpull-requestto certain branches that are included:

on:

# Automatically triggers this workflow when there is a push (i.e. new commit) on any of the included branches

push:

branches: [sample-branch1, sample-branch2]

# Similar to push:, but for PRs towards the included branches

pull_request:

branches: [sample-branch1]

- We can also define an manual trigger point using the

workflow_dispatchkeyword:

on:

# Allows you to run this workflow manually from the Actions tab

workflow_dispatch:

Github APIs

REST vs GraphQL

Issue faced: As both CATcher and WATcher involves heavy interaction with the GitHub API(i.e. GitHub acts like our database), I often ran into issues related to the models that we retrieve from the Github API:

- Problematic RestGithubIssueState value #1310

- Redundant fetching of GitHub issues: Duplicated REST & GraphQL API Calls #1313

Knowledge gained:

- REST API

- Designed for standard HTTP requests and has fixed endpoints, hence

- responses usually have more data than needed by the users, and

- we might need multiple requests and aggregate data in the application logic to form the final results

- GraphQL API

- Designed as a query language, and can specify exactly what we need in a single request, hence

- we can prevent over-fetching or under-fetching

- GitHub adopted both API standards and provides endpoints to both these standards, allowing clients the flexibility to decide on their own what standard they want to use

- We started off the project with REST APIs, and later on decided to move towards GraphQL APIs as the customized queries fit our use case more (since GitHub is like a database to us). However, in order to prevent making breaking changes to the existing API calls, we decided to not change most of the existing REST API calls that were already implemented.

Reference:

Project management

Branch management strategies

Issue faced:

While working on WATcher features, there were team members working on bug fixes that are supposed to be deployed

in the next version, as well as team members working on new features that are supposed to be deployed in future versions. The work being done for future versions could not be merged to main branch as they are not supposed

to be deployed in the next version.

Knowledge gained:

We explored multiple possible strategies (discussed in this issue thread)

- We agreed that such scenarios where different team members are working on different future versions are very unlikely, and it wouldn't really make sense to have a branching workflow specifically for such scenarios

- Hence, we are proceeding with the original workflow, where each new feature will have a feature branch and will be merged to the

mainbranch once completed

Release management strategies

Issue faced:

We noticed that CATcher and WATcher were using different release management/deployment strategies, the differences are stated in this issue.

Knowledge gained:

We explored multiple possible release management and deployment strategies, and concluded that the automated workflow used in WATcher is not necessary more streamlined, as it would require additional PR to a deploy branch in order to trigger the deployment. In cases where we need to have hotfixes / cherry-pick certain releases, it would be even more troublesome, as we would need to create a seperate branch to include the hotfixes, then PR that branch to the deploy branch to trigger the deployment.

Hence, we standardized the deployment workflow to be manually triggered from github actions, and it would target a specific branch to be deployed. This strategy is more convenient as we would be able to directly deploy from main when we decide to create a new release, and when there are further changes/hotfixes required, we could simply branch out from that specific commit that were deployed (we use tagging so that these commits can be easily found) and apply the hotfixes, then deploying from that branch directly. Notice that this strategy is actually similar to the release branching workflow, but in the sense where we don't create a branch for the first release (we simply tag the commit on main), but if hotfixes are needed, we would then be in the same workflow as release branching

LOH ZE QING, NORBERT

ngx-markdown

I learned about the ngx-markdown library while I was working on a fix to preserve whitespace when converting Markdown to HTML. ngx-markdown combines multiple different language parsers and renders them in one library. ngx-markdown supports Marked, Prism.js, Emoji-Toolkit, KaTeX, Mermaid, and Clipboard.js. I learned about configuring the options for the Markdown HTML element.

Marked

Marked is the main parser we use for our comment editor in creating/editing issues and responses. I learned that any text that we write in Markdown syntax is converted into HTML elements using Marked. I found out that we can actually override how Marked generates the HTML elements, and we can add more attributes like classes, styles, and even modify the text before rendering it.

nvm-windows

WATcher requires node 14 in order to npm install some of its dependencies. However, instead of having to install and reinstall a different node version between different projects, I can use nvm-windows to install multiple node versions and switch between them. However, the latest version of nvm-windows has some issues if youwant to install node 14. After some debugging, I found out that nvm-windows v1.1.11 can install node 14 with no issues.

CATcher phase management

While working on creating a new phase, I learnt a lot about how phases are managed in CATcher. Every phase has its own phase permissions and phase routing. Phase permissions controls certain tasks. For example, creating a new issue, deleting an issue, editing an issue is only allowed at certain phases. Every phase also has its own routing which is used to load the different pages ranging from, viewing to editing. I also learnt that the repos to hold the issues are generated only at the bug reporting phase.

Git commit hooks

While I was working on a PR, I was wondering why certain parts of the code are modified after pushing a commit. I then found out that there are commit hooks in place to fix and format and lint issues. Source tree actually allows users to bypass the commit hooks if the changes are irrelevant to the PR that the user is working on.

Github search query

While working on implementing the feature 'View on github' for WATcher where a user will be able to see the current dashboard in github, I learnt that github searches can actually be done using URL queries.

URL encoding

While working with URL queries, I learnt that some characters are not allowed in URLs. Such characters are "!"$$()" etc. In order to use them, they must be encoded into UTF-8. More information can be found here.

GraphQL

While I was exploring a new feature, I realised that there is no dedicated sandbox for testing the API/Queries. This made it hard for me to understand how the queries work and what the queries response look like. It was very troublesome to have to look at the network tab and look at the response.

I also learnt about the difference GraphQL features like schema and fragments which are important for creating reusable and easily maintable queries.

I also learnt how WATcher uses pagination to perform queries to GitHub using cursor.

Postman

Following the exploration of GraphQL, I found that some of my teammates were trying to implement new features that required data from GitHub. However, they were struggling with understanding the GraphQL queries due to the lack of visualization. This has prompted me to create a sandbox for testing the GraphQL queries.

I discovered how to create reusable queries in Postman using collection variables such that anyone can fork the collection and start working on it without having to set up anything other than authorization.

I also learnt how to create environments for workspaces such that sensitive data such as secret keys will not be shared to public.

SOH ZHENG YANG, MARCUS

Tool/Technology 1

Angular

Angular is the main tool used in both CATcher and WATcher. It is based on TypeScript.

Angular is a component-based framework. Each component is generated with:

- *.component.ts

- *.component.html

- *.component.css

Component state is maintained in the .ts file. These state variables can be bound to HTML elements through use of curly braces .

Angular offers directives such as ngIf, ngFor that allow us to "use" JS in the HTML files.

Services are used for processing, for tasks that don't involve what the user sees. This is different from the .component file, which directly handles things the users see. Services are kept in a separate directory /services/*.

Angular services can be "injected" into other services. This is done in the constructor. Once injected, the service can access of any the injected service methods. But, it's important to not design the code in such a way that it causes a circular dependency. This was something I faced when implementing the presets, as the preset service relied on the filter service but the filter service also relied on the preset service. To fix it, we can redesign the code such that it doesn't have this circular dependency or we can extract out the parts into a 3rd service that is then injected into both.

Models in Angular can be used to enforce the type of data. This can also make it easier to separate and isolate related methods, such as putting preset-related functions in the Preset model.

Angular also has lifecycle hooks ngOnInit(), ngOnDestroy(). These setup and cleanup functions function quite similarly to React's useEffect, and are primarily used (among other things) to setup and clean up listeners, to prevent memory leaks

Tool/Technology 2

RxJS

RxJS is the core component required for reactivity in Angular applications. It exposes the idea of "observables", and when the state of that observable changes, it notifies any listeners attached to it.

Observables can be subscribed to and de-subscribed to at any time, using the .subscribe function. It is common practice to dennote observables as variables with

a trailing "

An observable is somewhat similar to a stream. We can register "stream processing functions" such as map, filter.

Tool/Technology 3

Material Angular

Material Angular is the design library used by CATcher and WATcher. Unfortunately, it is currently using version 11, when the latest version is 19. Despite this, most of the API is similar.

Material Angular allows us to use pre-made components that follow the Material design style, allowing us to have a consistent and coherent UI experience.

Material Angular provides advanced customization through the ::ng-deep CSS selector. Normally, any CSS written in the .css file will not apply to the generated Angular Material HTML components, however, by using ::ng-deep, it can be.

However, it's important to note that this is a deprecated in future versions.

Tool/Technology 3

Github API

GraphQL API Queries to Github are used extensively in WATcher. As a result, it is not uncommon that rate limits will be hit when opening a large repository. The rate limit of Github is 5k "points" per user per hour, and upon hitting this limit, we are locked out of the GraphQL API (but NOT normal Github API!) for 1 hour.

...

TNG WEN XI

Angular

CATcher and WATcher are both built using the Angular framework, which is a single-page web appliation framework. Angular comes with a CLI tool to accelerate development.

Components

- Components are the fundamental building blocks of Angular applications.

- Generating a component will create a TypeScript file, a HTML file, a CSS file, and a test file.

- The TypeScript class defines the interaction of the HTML template and the rendered DOM structure, while the style sheet describes its appearance.

- The

@Componentdecorator in the .ts file identifies the class immediately below it as a component class, and specifies its metadata. It associates a template with the component by referencing the .html file (or with inline code). - Template syntax

- A template contains regular html as well as Angular template syntax, which alters the HTML based on the application's logic and the state of application and DOM data.

- Templates can use:

- Data binding

- Pipes

- Directives

Services

Dependency injection (DI) is a design pattern for creating and delivering some parts of an application to other parts of an application that requires them. In the DI system, there are two main roles: dependency consumer and dependency provider.

In Angular, dependencies are typically services. When a service is provided at the root level, it becomes a singleton and and all classes will share the same instance of the service. This allows different classes (components, services, etc.) to inject the service and share information through it.

GraphQL

- CATcher and WATcher use GraphQL to fetch and update issues, PRs, and comments from GitHub.

- GraphQL is a query language, which is a specific syntax used to query a server to request or mutate data.

Drawbacks to using a traditional REST API:

- Overfetching

- Getting back more data than needed

- Underfetching

- Getting back less data than needed

- Need to make multiple requests to different end points

GraphQL API is resolved into its schema and resolvers:

- Schema describes how the API will work

- Every schema has two required types: the query and the mutation type

- Query: for fetching and reading data

- Mutation: For creating, updating, or deleting data from API

GraphQL allows users to manually choose which fields they want to fetch from the API

In the case of WATcher, using GraphQL means that additional fields can be fetched easily simply by adding new fields to the query and changing the Angular model for the issue / PR. For instance, when working on displaying reviewers in the PR cards in WATcher, I only had to add to the FetchPullRequests query and edit the respective Angular models to include the newly fetched data.

Rate limits

GitHub's GraphQL API has limitations in place to protect against excessive or abusive calls to GitHub's servers. While working on WATcher, we noticed that opening a large repository such as NUSMods will cause the rate limit to be exceeded very quickly, and this could be a potential problem for users who want to use WATcher for large repositories.

Primary rate limit

Users have a limit of 5000 points per hour per user, where the point value of a query can be calculated as specified in their docs.

Secondary rate limit

GitHub also enforces secondary rate limits to prevent abuse of the API. GitHub does not allow too many concurrent requests, and no more than 2,000 points per minute are allowed for the GraphQL API endpoint.

MarkBind

ADRIAN LEONARDO LIANG

Tech Stack

VueJS

VueJS is a JavaScript framework for building user interfaces, similar to React. It offers reactive data binding and a component-based architecture, allowing developers to create reusable components that allow for parent-child relationships. Vue is used extensively in MarkBind to create and render website components, such as pages, boxes, code blocks, etc.

<script>

Vue components often have a <script> block which defines the logic for the component. It involves sections like:

props- Defines data received from a parent componentdata()- Returns an object containing local reactive state variablescomputed- Defines derived properties that automatically update whenever dependencies changemethods- Functions that you can call within your template or events- Lifecycle hooks (

created,mounted, etc.) - Functions Vue calls automatically at specific points in the component's lifecycle

Template Refs

Vue provides a way to directly access underlying DOM elements using the ref attribute. Using either useTemplateRef or this.$refs, we can obtain the reference and directly manipulate the DOM element. However, this ref access should only be done after the component is mounted. Before it is mounted, it's possible for either methods to return null or undefined, which may lead to issues down the line.

One example of this was in the Tab and TabGroup of MarkBind. this.$refs.header was accessed within the computed property, which could be evaluated before the component mounts. This lead to an issue with Tabs not rendering. To fix this, the header reference should only be accessed after the component has mounted, such as under the mounted() lifecycle hook.

Resources:

- VueJS Tutorial - Official tutorial for VueJS

- VueJS Guide - Official VueJS Guide

- Template Refs - Vue3 Docs for Template Refs

TypeScript

TypeScript is a programming language that builds upon JavaScript by adding static typing, enabling developers to catch errors at compile time and write more maintainable code as compared to JavaScript. MarkBind uses TypeScript primarily in its core package.

MarkBind also has documentation describing the migration process from JavaScript to TypeScript. The migration PR should be structed as two commits, a "Rename" commit and an "Adapt" commit.

- The "Rename" commit is a commit specifically for renaming files from having the

.jsextension to having the.tsextension. Git should regard the changes of this commit as arename. - The "Adapt" commit is to adapt the files fully to TypeScript. This means fixing things like changing syntax to match TypeScript's syntax or adding types as necessary.

This two-step process for the migration helps to ensure Git views these changes as a renaming and adaption of the same file, rather than a deletion and creation. This helps with the review process on GitHub, as the diffs are shown side-by-side instead of entirely different files. For example, see the commit history in this PR. It also ensures that the history of a .ts file can still be traced back to its .js version, preserving valuable information for future developers.

TypeScript uses a configuration file tsconfig.json to define how the TypeScript compile tsc should compile .ts files into JavaScript. It is used primarily in MarkBind to exclude the compilation of node_module files and .test.ts files.

Resources:

- TypeScript Handbook - Official documentation for TypeScript

- TypeScript Migration - MarkBind's documentation for TypeScript migration

Jest

Jest is a JavaScript testing framework that can be used for any JavaScript project. It runs tests defined in .test.js files using a built-in test runner. Jest provides helpful functions like describe, test and expect, along with matchers like toEqual and toThrowError to define and assert test behavior.

In MarkBind, Jest is used extensively to test JavaScript and TypeScript files. It is primarily used for unit testing components and utility functions. These tests help prevent regressions and ensure code reliability as features evolve or are added to the codebase.

It supports setup and teardown of any preparation needed for the tests, using beforeEach, beforeAll, afterEach and afterAll. This is especially useful when some setup is needed to ensure that tests are independent of each other and that they are tested under the same conditions every time. An example of this can be seen in Site.test.ts, where before each test enviroment variables are deleted to avoid affecting the tests themselves.

It also supports function mocking, allowing developers to simulate and monitor function behavior — such as the number of calls and the arguments used — which is especially useful for unit testing. This can be found in NodeProcessor.test.ts, where Jest is used to mock the logger.warn function in order to track that certain messages are properly logged to the console for users.

Jest normally works by default but it is also possible to configure it using the jest.config.js file. There are many options you can configure, like verbose which reports every individual test during the run and testMatch which can be used to specify which test files to run, rather than running all .js, .jsx, .ts and .tsx files inside of __tests__ folders. testMatch was used this semester to ensure only the TypeScript version of tests are ran, preventing duplicated testing from running both .ts and .js files.

Resources:

- Jest Docs - Official Jest Documentation

- Jest Config Docs - Official Documentation on Jest Config

ESLint

ESLint is a JavaScript and TypeScript linter that statically analyzes code to find and fix problems, enforcing consistent coding styles and catching potential bugs before runtime. It parses the code and applies a set of rules to detect issues such as syntax errors, stylistic inconsistencies and potential bugs. These rules can be configured in .eslintrc.js by extending presets like airbnb-base or by specifying individual rules like "func-names": "off".

MarkBind uses ESLint to maintain code quality and consistency across the codebase. It helps enforce coding standards among the developers in both JavaScript and TypeScript files. ESLint is run not only during development but also during testing and CI. MarkBind has configured .eslintrc.js to match its desired coding standard, such as enforcing a 2-space indentation.

Resources

- ESLint Docs - Official ESLint Documentation

- MarkBind's ESLint Config - MarkBind's

.eslintrc.jsFile

GitHub Actions

GitHub Actions are a set of tools that allow developers to automate certain workfloaws and add them to their repositories. One of its main uses in MarkBind is to implemenet a Continuous Integration (CI) pipeline. PRs and commits are automatically built and tested to ensure the code stays functional, verifying the integrity of MarkBind's master branch.

The workflows are defined in .yml files located in the .github/workflows directory. Each .yml file describes:

- Triggers: When the workflow should run (e.g. on push, pull requests, etc.)

- Jobs: A set of tasks to do, such as installing dependencies, running tests or uploading to CodeCov

- Steps: The individual commands within each job (e.g.

npm install,npm run setup,npm run testetc.)

CodeCov

CodeCov is a code coverage reporting tool that helps developers understand how much of their code is tested and accounted for by automated tests. It is able to visualize which lines of code are covered and which aren't. CodeCov can be a part of the CI pipeline, where the test coverage is uploaded after all tests have passed.

MarkBind uses CodeCov to generate the code coverage reports within PRs. The code coverage report can then be used during the review process to determine if any drop in code coverage is acceptable or should be minimized.

Code Coverage Calculations:

One thing I learnt about CodeCov was how code coverage is calculated and how indirect changes affect it:

- Code coverage is calculated only for files that have at least one test. That means that files with no tests at all are excluded from the coverage percentage, even if they're 0% tested. If a test is later added to such a file, that file then becomes part of the coverage calculation.

- Indirect changes occur when testing one file brings in other previously untested files. For example, if an untested file (File A) depends on other untested files (Files B, C), adding a test to File A will also pull File B and C into the coverage calculaton, even if they remain untested.

This behaviour explains the drop in coverage in this commit. Tests were added to serve.js, which was originally untested. This lead serve.js to be included in the coverage calculations. However, since serve.js depends on other untested files like logger.js, live-server/index.js and serveUtil.js, those files were also included indirectly in the calculation. Because those additional files were also untested, the overall coverage percentage dropped, causing the commit to fail the CI coverage check.

Upload Tokens:

Another issue with CodeCov was its use of global upload tokens to upload coverage reports. These tokens were limited, meaning that during periods of high traffic, PRs would not reliably generate coverage reports (see discussion issue #2658). This would hinder the review process and was also the reason why the drop in coverage in the previous example was only noticed after the PR had already been approved.

We resolved this by migrating from CodeCov v3 to v5. In v5, the release notes stated that it was now possible to upload reports without an upload token, provided certain conditions were met. One of these conditions was that our repository was public and the reports had to come from an unprotected branch.

Since MarkBind follows a fork-and-PR workflow, these PRs from forks are considered as unprotected branches. This allowed us to take advantage of the new tokenless upload feature, making coverage uploads far more reliable during CI runs.

Resources:

- CodeCov Docs - Official CodeCov Documentation

- CodeCov v5 - CodeCov v5.0.0 Release Notes

- Forking Workflow - MarkBind's Forking Workflow Guide

RegEx

Regular Expressions (RegEx) are a sequence of characters used to match a patterns in text. They can range from simple exact-word matches to complex patterns using special characters.

RegEx is typically used in MarkBind to validate user inputs andc heck for discrepancies. Some examples include:

- The Highlighter component, to match the highlighter rules

- Within the

servecommand, to detect IP zero addresses and check the validity of IP addresses

Resources:

- RegEx Cheatsheet - A reference for RegEx constructs

- RegEx101 - An interactive tool for testing RegEx patterns

MarkBind

MarkBind Highlighter Component

In MarkBind, users can specify highlighter rules following the syntax in our User Guide. MarkBind then highlights the code block appropriately when rendering the site.

Implementation Details

Markbind's core package processes highlighter rules in the following steps:

- Parsing Highlighter Rules

- Uses RegEx in

core/src/lib/markdown-it/highlight/HighlightRuleComponent.tsto match the syntax for rules such as character or word-bounded highlights.

- Uses RegEx in

- Calculating Character Bounds

- Utilizes

computeCharBounds()to adjust user-specified bounds, ensuring they are valid. - Handles unbounded values and adjusts for indentation level so that bounds are relative to indentation level.

- Clamps values to ensure they stay within the valid range.

- Utilizes

- Wrapping Highlighted Content

- Wraps text content within

<span>elements (highlightedorhl-data) to apply the necessary highlighting when rendered.

- Wraps text content within

Absolute Character Position Highlighting

Problem

Previously, the highlighter could not highlight indentation since it automatically adjusted for it during character bound calculation.

Solution

I introduced a feature allowing users to specify absolute character positions rather than relative positions.

- Users can prefix their character bounds with

+to indicate absolute positioning. - The RegEx parser was updated to accomodate this syntax.

computeCharBounds()was modified to skip indentation length adjustments if absolute bounds were detected.

Edge Case: Handling Tab Characters (\t)

An issue arose when using absolute bounds with tab characters. Since \t was considered a single character but visually occupied more space, the highlighting results were inconsistent. To resolve this:

- I implemented an automatic conversion of tab charactes to four spaces using

code.replace(/\t/g, ' '). - This ensured consistent highlighting behavior regardless of tab spacing.

MarkBind CLI commands

MarkBind provides several Command Line Interface (CLI) commands, such as init, serve and build. Details can be found in the User Guide.

Implementation Details

MarkBind's CLI functionality lies within the cli package. It uses the commander library to create and configure its CLI commands. The library allows developers to customie the commands, such as their aliases, options, descriptions and action. The user's specified root and the options are then passed on to the corresponding action function.

MarkBind's serve command

MarkBind's serve command allows users to preview their site live. It follows these steps:

- Receiving the CLI command

- The

commanderlibrary processes theservecommand, along with user-specified options. - These values are passed to the

serve()function incli\src\cmd\serve.js.

- The

- Building the Site

- The

serve()function performs preprocessing to verify that the user-specified root contains a valid MarkBind site. If not, an error is thrown and execution stops. - A

serverConfigobject is created and passed to theSiteinstance before being used to configureliveServer.start().

- The

- Starting the Web Server

- The server is started using

cli\src\lib\live-server\index.js, which is a custom patch of thelive-serverlibrary. live-serveruses Node.js'shttpmodule to start the web server.- It listens for the

errorevent, handling errors such asEADDRINUSE(port already in use) andEADDRNOTAVAIL(address not available). - It also listens for the

listeningevent, indicating that the server is ready so the site URL can be opened.

- The server is started using

- Opening the Live Preview

- The

opnlibrary is used to automatically open the preview URL. live-serverlistens for file system events likeaddorchangeto trigger areloadevent, updating the preview in real-time.

- The

Issues with live-server Patch

MarkBind's live-server patch had some issues, particularly with IPv6 addresses:

Invalid IPv6 URLs

When an IPv6 address is supplied by the user, the opened URL is invalid. IPv6 URLs require square brackets[], e.g., the address::1should be opened with a URL likehttp://[::1]:8080instead ofhttp://::1:8080. As a side note,localhostresolves to::1.Incorrect Open URL for EADDRNOTAVAIL

When this error occurs (indicating the specified address is unavailable), the patch retries using127.0.0.1. However, the opened URL still referenced the original unavailable address, causing confusion for users.Missing Warning for IPv6 Broadcast Address

serve.jsissues a warning when users specified0.0.0.0(IPv4 broadcast address), but the equivalent warning was missing for IPv6 addresses like::.

Resources

- 'commander' Package - Official

commandernpm page live-serverPackage - Officiallive-servernpm page

Project Management

MarkBind has documentation for Project Management with topics ranging from Managing PRs, Doing a Release, Acknowledging Contributors and Managing the Repository. This information will be useful when working on MarkBind as a senior developer for CS3282.

Managing PRs

MarkBind follows the general guidelines stated under Working with PRs @SE-EDU. In particular, the way we phrase our PR review comments is important for a good discussion.

PR Approvals

MarkBind follows the rule that PRs should normally need two approvals before merging. This is to allow other developers to review the PR before hastily merging. If the PR is simple enough, then there's no need to wait for another approval.

As a side note, it's important to check for certain things in a PR. For example, PRs should not make any unnecessary changes in the codebase when solving an issue. It's also good to make use of the report generated by the CI pipeline, such as where all test cases pass and the coverage report generated by CodeCov. If any drop in coverage is detected, the reviewer will need to consider whether such a drop is too severe or could be avoided and addressed by the PR author.

Resources

- Project Management - MarkBind's documentation for Project Management

CHAN GER TECK

List the aspects you learned, and the resources you used to learn them, and a brief summary of each resource.

Internal Tools/Technology

How MarkBind Works (Overview of everything)

In order to make more well informed changes and tackle deeper issues, I decided to cover the whole codebase of Markbind just so I could have a much fuller understanding of how different parts worked together.

While doing so, I used a MarkBind site to document the architecture and different packages and classes in the MarkBind codebase. The site can be viewed here: https://gerteck.github.io/mb-architecture/

Markbind's Search Utility

How Native MarkBind Search works

Collection of Title and headings in generation:

- We trace the website generation in

Site/index.ts. - When building source files, during the page generation process,

Page.collectHeadingsAndKeywordsrecords headings and keywords inside rendered page into this.headings and this.keywords respectively. - When writing site data, the title, headings, keywords are collected into pages object.

Page Generation and Vue Initialization

- In

core-web/src/index.js, thesetupWithSearch()updates the SearchData by collecting the pages from the site data.setupWithSearch()is added as a script in the file templatepage.njkused to render the HTML structure of Markbind pages.- This file template is used during the page generation process.

- Note also that

VueCommonAppFactory.jsprovides a factory function (appFactory) to set up the common data and methods for Vue application shared between server-side and client-side, and provides the common data properties and methods.- In particular,

searchData[]andsearchCallback(), which are relevant in the following portion. - When using

<searchbar/>, this is where to use MarkBind's search functionality, we set the appropriate values:<searchbar :data="searchData" :on-hit="searchCallback"></searchbar>

- In particular,

Vue Components: Searchbar/SearchbarPageItem.vue Searchbar.vue

- The searchbar uses the

searchData[]indata, filters and ranks the data based on keyword matches and populates the dropdown withsearchbarPageItems. - It calls the

on-hitfunction (whichsearchCallbackis passed into) when a search result is selected. - Presentation wise, each search result is represented by a

searchbar-pageitemvue component.

SearchbarPageItem.vue

- Presents the component conditionally based on whether item is a heading or a page title.

How the new Markbind PageFind Plugin Works

About PageFind: A fully static search library that aims to perform well on large sites, while using as little of users bandwidth as possible, and without hosting any infrastructure.

Documentation:

Integration of Pagefind into MarkBind

It runs after the website framework, and only requires the folder containing the built static files of the website. A short explanation of how it works would be:

- PageFind indexes the static files

- If pagefind is included as a plugin, we indexSites with PageFind, which writes the index files _site/pagefind

- Plugin exposes a pagefind JS API for searching

- Alternatively, use pagefind default UI for searching. This is done by processes containers with

id="pagefind-search-input", and initialing a default PageFindUI instance on it, not unlike how algolia search works.

- Alternatively, use pagefind default UI for searching. This is done by processes containers with

- This JS API is used by a custom Vue component searchbar.

External Tools/Technology

Vue

Vue 2 to Vue 3

I got the chance to experience this firsthand.

- Through the process (ongoing), it has also allowed me to uncover a significant number of bugs in MarkBind.

https://v3-migration.vuejs.org/migration-build

MarkBind (v5.5.3) is currently using Vue 2. However, Vue 2 has reached EOL and limits the extensibility and maintainability of MarkBind, especially the vue-components package. (UI Library Package).

Vue 2 components can be authored in two different API styles: Option API and Composition API. Read the difference here It was interesting to read the difference between the two.

- The Option API organizes code into predefined options like data, methods, and computed, making it simpler and more beginner-friendly but less flexible for complex logic.

- In contrast, the Composition API uses a setup() function and reactive utilities like ref and reactive, allowing logic to be grouped by feature for better modularity and reusability. While the Option API relies on mixins for reuse, which can lead to conflicts, the Composition API enables cleaner and more scalable code through composable functions.

- Additionally, the Composition API offers superior TypeScript support and is better suited for large, complex applications, though it has a steeper learning curve compared to the straightforward Option API.

Server-side rendering: the migration build can be used for SSR, but migrating a custom SSR setup is much more involved. The general idea is replacing vue-server-renderer with @vue/server-renderer. Vue 3 no longer provides a bundle renderer and it is recommended to use Vue 3 SSR with Vite. If you are using Nuxt.js, it is probably better to wait for Nuxt 3.

Currently, MarkBind Vue components are authored in the Options API style. If migrated to Vue 3, we can continue to use this API style.

Vue 2 to Vue 3 (Biggest Shift)

Vue 2 In Vue 2, global configuration is shared across all root instances as concept of "app" not formalized. All Vue instances in the app used the same global configuration, and this could lead to unexpected behaviors if different parts of the application needed different configurations or global directives.

E.g. global API in Vue 2, like Vue.component() or Vue.directive(), directly mutated the global Vue instance.

Some of MarkBind's plugins depend on this specific property of Vue 2 (directives, in particular, which are registered after mounting).

However, the shift to Vue 3 took into consideration the lack of application boundaries and potential global pollution. Hence, Vue 3 takes a different approach that takes a bit of effort to migrate.

Vue 3

In Vue 3, the introduction of the app instance via createApp() changes how global configurations, directives, and components are managed, offering more control and flexibility.

- The

createApp()method allows you to instantiate an "app," providing a boundary for the app's configuration -- Scoped Global Configuration: Instead of mutating the global Vue object, components, directives, or plugins are now registered on a specific app instance.

Also some particularities with using Vue 3:

Vue SFC (Single File Components)

Vue uses an HTML based template syntax. All Vue templates

<template/>are syntactically valid HTML tht can be parsed by browsers. Under the hood, Vue compiles the template into highly optimized JS code. Using reactivity, Vue figures out minimal number of components to re-render and apply minimal DOM manipulations.

SFC stands for Single File Components (*.vue files) and is a special file format thaat allows us to encapsulate the template, logic, styling of a Vue component in a single file.

All

*.vuefiles only consist of three parts,<template>where HTML content is,<script>for Vue code and<style>.SFC requires a build step, but it allows for pre-compiled templates without runtime compilation cost. SFC is a defining feature of Vue as a framework, and is the reccomended approach of using Vue for Static Site Generation and SPA. Needless to say, MarkBind uses Vue SFCs.

<style>tags inside SFCs are usually injected as native style tags during development to support hot updates, but for production can be extracted and merged into a single CSS file. (which is what Webpack does)

Vue Rendering Mechanism

Reference: https://vuejs.org/guide/extras/rendering-mechanism

Terms:

virtual DOM (VDOM)- concept where an ideal 'virtual' DOM representation of UI kept in memory, synced with the 'real' DOM. Adopted by React, Vue, other frontend frameworks.mount: Runtime renderer walk a virtual DOM tree and construct a real DOM tree from it.patch: Given two copies of virtual DOM trees, renderer walk and compare the two trees, figure out difference, apply changes to actual DOM.

The VDOM gives the ability to programmatically create inspect and compose desired UI structures in a declarative way (and leave direct DOM manipulation to renderer).

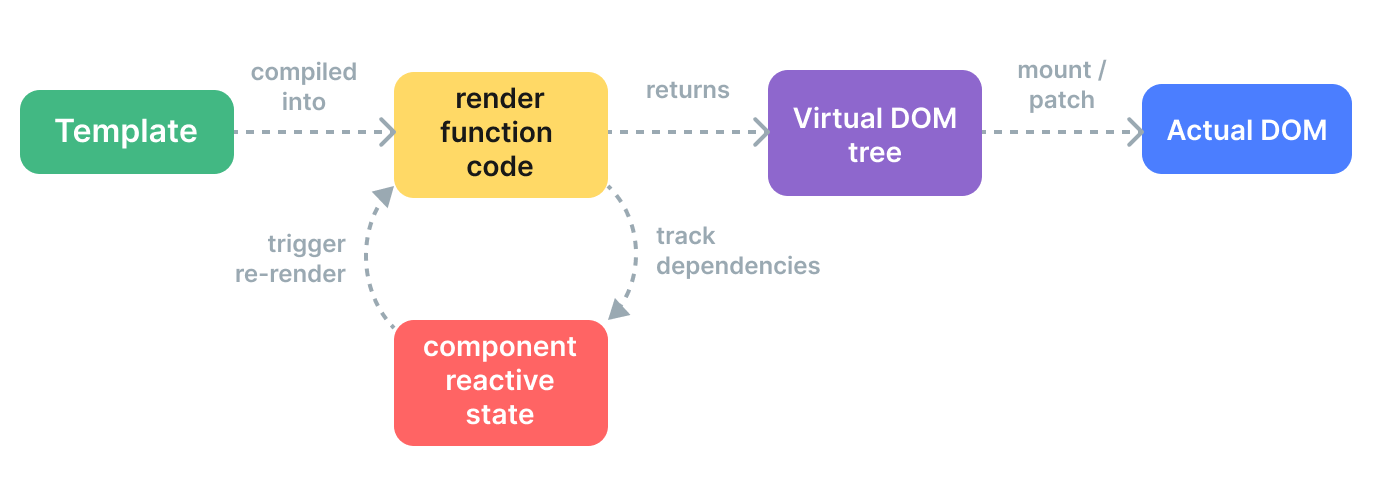

Render Pipeline What happens when Vue Component is Mounted:

- Compile: Vue template compiled into render function, functions that return VDOM trees. (Done ahead of time in MarkBind)

- Mount: Runtime renderer invoke render function, walks VDOM, creates actual DOM node.

- Patch: When dependency used during mount changes, effect re-runs, new updated VDOM created. Then, patch the actual DOM.

Vue Server Side Rendering (SSR)

It is possible to render the Vue components into HTML strings on the server, send directly to the browser and finally 'hydrate' static markup into fully interactive app on the client.

Advantages of SSR:

- Faster time to content, especially on slower devices

- Unified Mental Model using same language and same declarative

- Better SEO since crawlers see fully rendered page

Roles of Server and Client in SSR:

SSR: The server's job is to:

- Compile the Vue template into a render function.

- Use the render function to generate static HTML.

- Send the static HTML to the browser.

Client-Side Hydration: Once the browser receives the static HTML from the server, the client-side Vue app takes over. Its job is to:

- Attach event listeners and reactivity to the static HTML.

- Make the app interactive (e.g., responding to user actions like clicks or input).

Vue 3 createApp() vs createSSRApp() createApp does not bother with hydration. It assumes direct access to the DOM, creates and inserts its rendered HTML. createSSRApp() used for creating Vue application instance specifically for SSR, where inital HTML is rendered on the server and sent to client for hydration. Instead of rendering (creating and inserting whole HTML from scratch), it does patching. It also does initialization by setting up reactivity, components, global properties etc, event binding during the mount process (aka Hydration).

External Packages used by MarkBind

live-server– A simple development server with live reloading functionality, used to automatically refresh the browser when changes are made to MarkBind projects.commander.js– A command-line argument parser for Node.js, used to define and handle CLI commands in MarkBind.fs(Node.js built-in) – The File System module, used for reading, writing, and managing files and directories in MarkBind projects.lodash– A utility library providing helper functions for working with arrays, objects, and other JavaScript data structures, improving code efficiency and readability in MarkBind

Research on Other SSGs

While working on Markbind, I thought that it would definitely be essential to survey other Static Site Generators and the competition faced by MarkBind.

Researching other SSGs available (many of which are open source as well) has allowed me to gain a broader picture of the roadmap of MarkBind.

For example, Jekyll is simple and beginner-friendly, often paired with GitHub Pages for easy deployment. It has a large theme ecosystem for rapid site creation. Hugo has exceptional build speeds even for large sites. Other SSGs offer multiple rendering modes (SSG, SSR, CSR) on a per page basis, support react etc. Considering that the community for all these other SSGs are much larger and they have much more resources and manpower to devote, I thought about how MarkBind could learn from these other SSGs.

Insights that could be applied to MarkBind

Overall, some insights that can be applied to MarkBind would be to:

- Focus on Content-Heavy Instructional Websites

- Double down on features tailored for educational, project documentation, and course websites.

- Highlight built-in components like popovers, tabs, and collapsible panels as unique differentiators.

- Emphasize "Out-of-the-Box" Functionality

- Simplify onboarding and documentation for new users.

- Provide all essential features for documentation by default (e.g., diagrams, code snippets, multi-level navigation).

- Position MarkBind as a solution that minimizes configuration while maximizing flexibility.

- Provide beginner-friendly guides and videos for quick adoption.

- Develop Pre-Built Templates

- Create specialized templates for use cases like course sites, research documentation, or user guides.

- Create ready-made themes/templates focused on education and documentation.

- Allow users to deploy quickly with minimal setup.

General Development Knowledge

CommonJS and ESM

CommonJS (CJS) is the older type of modules and CJS were the only supported style of modules in NodeJS up till v12.

- Use the syntax

requireandmodule.exports = {XX:{},} - Global, synchronouse require function added to import other odules.

- mark the file as a CJS module by naming as

.cjsor by using typecommonjsin package.json.

EcmaScript Modules (ESM) standardized later and are the only natively supported module style in browsers. It is the (EcmaScript standard) JS standard way of writing modules/

- use

import { XXX } from YYY(top of file),const { ZZ } = await import("CCC");andexport const XXX = {}. - Syntax addition to JS and allows to easily import and export static members.

Issues I faced:

- I didn't realize tha my TypeScript code was being compiled to CommonJS (

require) instead of ES module syntax (import), and henceimportwas not working correctly. - Had to change the

tsconfig.jsonsettings appropriately.

Classic Scripts vs Module Scripts in JS

JavaScript offers two script types: module and non-module. (For web pages, JavaScript is the Prog. Lang for the web after all).

Module Script Files: use ES Modules (import/export), run in strict mode, and have local scope, making them ideal for modern, modular applications. They load asynchronously and are supported in modern browsers and Node.js (with .mjs or "type": "module").

- Scope: Local (encapsulated)

- Execution: Strict mode by default

- Loading: Asynchronous, deferred

- Reusability: High (modular)

- Browser Support: Modern browsers

- Node.js: Native (

.mjsor"type": "module")

Non-Module Script File rely on global scope, lack strict mode by default, and load synchronously. They work in all browsers and use CommonJS (require) in Node.js, making them suitable for legacy projects or simple scripts.

- Syntax: No

import/export - Scope: Global (pollution risk)

- Execution: Non-strict by default

- Loading: Synchronous by default

- Reusability: Low (global dependencies)

- Browser Support: All browsers

- Node.js: CommonJS (

require)

Use modules for modern, scalable apps and non-modules for legacy compatibility or simpler use cases. Transition to modules for better maintainability.

TypeScript

TypeScript has two main kinds of files. .ts files are implementation files that contain types and executable code. These are the files that produce .js outputs, and are where you normally write your code. .d.ts files are declaration files that contain only type information. These files don’t produce .js outputs; they are only used for typechecking.

DefinitelyTyped /

@types: The DefinitelyTyped repository is a centralized repo storing declaration files for thousands of libraries. The vast majority of commonly-used libraries have declaration files available on DefinitelyTyped.Declaration Maps:

.d.ts.mapDeclaration map (.d.ts.map) files also known as declaration source maps, contain mapping definitions that link each type declaration generated in .d.ts files back to your original source file (.ts). The mapping definition in these files are in JSON format.- This is helpful in code navigation. This enables editor features like “Go to Definition” and Rename to transparently navigate and edit code across sub projects when you have split a big project into small multiple projects using project references.

CHEAH GEE NUNG, IAN

Tool/Technology 1

List the aspects you learned, and the resources you used to learn them, and a brief summary of each resource.

Vue

1. Components of Vue

A Vue component typically consists of three main sections.

- Template: this defines the HTML structure

- Script: Contains the logic and data for the component

- Style: Defines the CSS specific to the component

2. Using Computed Properties in Vue.js

When doing experimental changes, I thought of letting users specify things like font size, font type, etc. Upon looking up the other components and stackoverflow, this is what I found

- In a basic Vue component, we can define a computed property by plaing it in the

computedoption. These properties are automatically updates when the underlying data changes.

3. Using Computed Properties vs Lifecycle Hooks

While working on PR, I learned about different approaches to access DOM elements.

In the TabGroups.Vue file, the original approach is to do

computed: {

headerRendered() {

return this.$refs.header.textContent.trim();

}

}

By having the headerRendered() under computed:, the benefit is that this is reactive by nature, it automatically updates when dependencies change. However, it can cause errors if accessed before DOM is ready.

To solve the issue of Tabs not showing correctly, this is the approach we adopted:

mounted() {

this.headerRendered = this.$refs.header.textContent.trim();

}

In this new approach, it guarantees DOM availability, which is better for one-time calulations. However, there are cons as well. This is not reactive, it requires manual upates if content changes.

I would summarize my learning in the following 3 points:

- Computed properties are best for reactive data that needs to stay synchronized

- Lifecycle hooks like

mountedare safer for DOM-dependent operations - $refs are only populated after the component is mounted

Here are the resources that I referenced while working on the issue

4. My Vue2 vs Vue3 research over the recess week

Since gerteck was working on Vue2 to Vue3 migration, I explored some differences between the 2 over the recess weeks and here are my gained knowledge.

- Reactivity System

- Vue2: uses

Object.definePropertyfor reactivity - Uses ES6

Proxy, enabling reactivity for dynamically added properties

- Composition aPI

- Vue2: Options API only (

data,methods,computed) - Vue3: Introduces Composition API (

setup()), allowing better logic reuse

- Performance

- Vue2: Slower cirtual DOM diffing.

- Vue3: Faster due to optimized virtual DOM and tree-shaking support

- Fragments

- Vue2: Requires a single root element in templates

- Vue3: Supports multiple root nodes (fragments).

Here are some documentations I referenced

HTML & CSS

1. Adding Hyperlinks in HTML and Markdown

When writing in Markdown, hyperlinks are created using a specific syntax, but behind the scenes, this Markdown code is converted into HTML.

In Markdown, we use syntax like

[Java Docs](https://docs.oracle.com/javase/8/docs/api/java/lang/String.html)to create a hyperlink. When the Markdown is converted to HTML, it generates an anchor tag in the form of<a href="https://docs.oracle.com/javase/8/docs/api/java/lang/String.html">Java Docs</a>. This would open the link in the same tab, as no additional attributes are specified.In contrast, when we write HTML durectly, we can specify additional attributes, such as

target="_blank", to control how the link behaves. Using the same example,<a href="https://markbind.org/userGuide/templates.html" target="_blank">User Guide: Templates</a>will ensure that the link opens in a new tab.

2. Rendering of font awesome icons using CSS

In one of my deployment on netlify, some of which did not display the font-awesomes icons properly, leading me to research on them.

Each font awesome (fa-linkedin, fa-github) is mapped to a Unicode character in the font file. For example,

when running the HTML code <i class="fa-house"></i>, CSS will first apply the fa-solid class based on its

mappings, CSS will also set aside the unicode charater for fa-house. The browser loads the web font fa-solid-900.woff2 and displays the icon.

What is woff2?

WOFF2 is a webfont file format, and it is a more compressed version of WOFF and is used to deliver webpage fonts on the fly. In the context of rendering font-awesome, font awesome icons are stored as glyphs in WOFF2 font files, when running <i class="fa-house"></i>, the browser loads fa-solid-900.woff2 if it is supported.

This page page is pretty useful

Web Architecture Concepts

1. Server-side Rendering(SSR) vs Client-side Rendering(CSR)

While investigating how components behave in Vue, I came across 2 interesting concepts - Server-Side Rendering (SSR) and Client-Side Rendering(CSR), especially in the context of statc site generation with Markbind. (interestingly, I got asked this alot during interviews when explaining Markbind)

SSR refers to HTML content on the server and sending the fully rendered page to the browser. This leads to faster initial page loads and better search-engine optimization (SEO), since search engines can search for contents drectly. Markbind takes a similar apporach - content is pre-rendered during build time, allowing static HTML to be servd efficiently without requiring JS to render everything on the client

CSR, on the other hand, renders the content in the browser using JS after the initial page load. This allows for highly dynamic, interactive applications but can result in slower time-to-content and challenges with SEO unless additional tools like prerendering are used.

By comparing these approached, it gave me a better understanding on why MarkBind's static generation strategy works well for documentation websites. These are cases where the content is relatively stable and fast load times are prioritized.

Here are some resoruces I referenced:

npm

In the beginning of the project, I ran npm commands by following instructions on the developer guide, without deep understanding of what each of them does. Here are what I learnt about npm and the different commands I frequently used.

1. What is npm

npm (Node Package Manager) is a command-line tool and online registry that allows developers to install, manage, and share packages for their Node.js applications. It simplifies the management of dependencies and project automation through scripts, helping to ensure consistent evnvironments and automate repetitive tasks like testing, building and deployment.

2. npm link

What it does:

npm linkis udes to symlink a local package for development purposes. It creates a global symlink to a local package and links it into another project, allowing us to test changes to a package without needing to reinstall it. How I used it- One example would be when I am testing the version of markbind. I used

npm linkto connect my local development version of MarkBind, to the CS2103T website. This allows me to serve the CS2103T website locally, to check for any regression issues.

3. npm run build:backend

What it does:

npm run build:backendis a custom npm script defined in MarkBind. This command is used to compile server-side code, to help build static files How I used it:- When I make changes to any

.tsfile, the changes will not be reflected after saving those cahnges and serving the test site. Instead,npm run build:backendneeds to be run before the new changes can be reflected

Cheerio

1. What is Cheerio

Cheerio is a fast, lightweight library for parsing and manipulating HTML and XML on the server side, using a jQuery-like syntax. It is built for Node.js and is ideal for use cases like:

- Server-side HTML manipulation

- Web scraping

- Static site generation tools Here are some resources I referenced when working with Cheerio

- Cheerio docs

- Cheerio github

2. Usage of Cheerio in markbind

While working on the PR #2649 Branch inline highlight new I gained deeper on how Markbind uses highlight for various types of inline highlighting.

- Whole line highlight

- Whole text highlight

- Partial text highlight - This is where Cheerio is used

For partial text highlight, Cheerio is used to dynamically parse and manipulate the HTML content, with the following steps

- It parses the rendered HTML to locate the specific section that needs to be highlighted

- Then it wraps the portion with the appropriate

<span>or similar tags to apply the highlight styling. - This manipulation is done server-side before the final HTML is served

Tooling and workflow

1. Lerna

While working with PR #2647 Remove parallel flag from test scripts, I experimented with Lerna's --parallel flag which runs tasks across packages concurrently. Here's what I learnt while playing around with it

- The

--parallelflag speed up execution but can cause interleaved logs, making test failures harder to trace Through my own research, I believe Lerna achieves this concurrency with the help of Node.js's single-threaded event loop architecture.

Here are the docs I referenced:

- https://www.linkedin.com/pulse/oncurrency-node-js-khaleel-inchikkalayil?utm_source=chatgpt.com

- https://levelup.gitconnected.com/exploring-parallelism-and-concurrency-in-node-js-4b84c2f397b

2. CJS vs ESM: Differences and Implications

In JavaScript, there are 2 major module systems for managing dependencies and code: CommonJS(CJS) and ECMAScript Modules(ESM)

- CJS

This is the traditional module system used in Node.js. It uses

require()to load modules andmodule.exportsto eport functionality. Example:

const fs = require('fs');

const myFunction = require('./myModule');

module.exports = { myFunction };

Key features include:

require()loads modules synchronously, blocking execution until the module is fully loaded.require()can be called anywhere in the code- It is the default module system in Node.hs until ESM became more widely supported

CJS is ideal for traditional Node.js environments, especially when working on backend systems where synchronous loading is acceptable

- ESM ESM is the official JS module introduced in the ES6 specification and is now the standard for JS modules. The usage of ES6 differs from CJS. Using the same example as above, we got:

import fs from 'fs';

import { myFunction } from './myModule.js';

export { myFunction }

Key features include:

- Modules are loaded asynchronously using the

importstatements - `supported natively by modern browsers and increasingly in Node.js as of version 12 and beyond

ESM is the preferred choice of JS applications, especially in frontend development and for projects that need to take advantage of tree shaking (the process of elimination dead code from the final JS bundle) and performance optimization

Here are some resrouces I referenced:

JAVIER TAN MENG WEE

CSS

CSS (Cascading Style Sheets) is a stylesheet language used to control the presentation of HTML documents.

word-break property: The word break property provides opportunities for soft wrapping.

- Different languages can specify different ways of breaking a sentence of text

- Significance comes from deciding how to break up a word either by character or word.

- For instance, in some languages like Ethopic, it has two styles of line breaking, namely by word seperators or between letters within a word.

- Markbind is mainly catered to English content and thus specifies line breaks at spaces.

Differences in render environment

Safari, Chrome and Firefox are commonly used web browsers. They all adhere to web standards, however they face differences in terms of how they handle the rendering of a web application.

The different rendering engine that each browser is built on can cause different rendering outcomes for different functions. Browsers can intepret the different CSS styles differently

Vue

Vue is a JavaScript framework that is used to create interactive interfaces. it is built on top of standard web tech like HTML, CSS and JavaScript and enhances them with its API. Vue has features such as reactivve rendering, allowing for components to update automatically without needing any manual developer manipulation as well as component-based architecture promoting reuse and modularity in applications.

Markbind uses Vue for most of its frontend components, mainly with the Options API of Vue.

<template>

This is the content that gets rendered into the DOM. It takes on regualar HTML syntax alongside Vue-specific syntax (Vue directives) to bind data, handle events and conditionally render elements.

Some useful vue directives are:

v-for: Loop through a listv-if: Conditionally render an element (removes from DOM)v-else: Used afterv-ifto handle the "else" casev-show: Conditionally display an element (usesdisplay: none)v-bind: Bind a value to an attribute dynamically

<script>

This is the part that is used to handle logic of my component. It is also used to give components "reactivity". For example, declaring props, data, writing methods, importing libraries or vue components, handling lifecycle hooks, generating constants, etc.

Options:

data- Used to declare reactive states of a component.mounted- Lifecycle hook that runs after the component is mounted to the DOM. Useful to call methods to populate variables defined indata.props- Defines external data passed into the component from the parentcomponents- Registers child components used inside the current componentmethods- Defines functions that can be used inside the templatecomputed- Declares derived values that update reactively based ondataorpropsProperties are being passed to vue components as props. These properties specifies the different configurations of the html templates.

Content passed by the

slotsAPI are considered to be owned by the parent component that passes them in and so styles do not apply to them. To apply styles to these components, target the surrounding container and then the style using a CSS selector such as.someClass > *

<style>

This defines the css styles that can be applied to this current component. If no style is defined, then it will just inherit any global or parent styles that applies.

Virtual DOM

“virtual” representation of a UI is kept in memory and synced with the “real” DOM

- Mounting - A runtime renderer walking through the virtual dom and construct an actual dom tree from it

- Patching - Two copies of virtual DOM trees walked and compared differences are found and changes are applied to the actual DOM

Main benefit of virtual DOM is that it gives the developer the ability to programmatically create, inspect and compose desired UI structures in a declarative way, while leaving the direct DOM manipulation to the renderer

How Vue components are mounted

- Compilation - Vue templates are compiled into render functions. The render functions are used to generate virtual doms

- Mounting - render function is called, and virtual dom is walked to create actual dom

- Performed as a reactive effect, keeps track of all reactive dependencies used

- Patch - a dependency used during mount changes, the effect re-runs → a new, updated Virtual DOM tree is created and patching is done

Rendering on the server side (SSR)

Reference By default, the DOM is produced and manipulated directly on the browser. This can be slow especially in the case of large DOMs. Vue supports server side rendering where the DOM is generated and HTML strings are created on the server, sent over to the browser and "hydrate"-ed into an interactive application on the client side by inserting all the reactive elements, listeners, etc.

Advantages:

- Faster time to content

- Unified mental model

- Better SEO

Tradeoffs:

- More involved build setup and deployment requirements

- Development constraints, Browser-specific code can only be used inside certain lifecycle hooks

- More server-side load

Testing:

Vue component test utilities library: Wrapper

According to my current understanding:

- Testing is done by first creating a wrapper with the component to be tested.

- The

$nextTick()function of the vm of the wrapper is then called which waits for the next DOM update flush. - The generated HTML is then compared with the snapshot that is generated.

DevOps

Github Actions is used when writing workflows. It allows for automating the building, testing and deployment pipelines. Markbind uses it for various purposes:

- Testing (ensuring existing/new test cases pass)

- Automated replies (Checking of new user)

- Ensuring workflow procedures are followed (Checking for missing pr information)

GitHub Actions details:

- Workflows are defined using YAML

- They are trigered by

eventsthat is used to automate checks. Some events include pushes, pull requests, issues, and more. - Workflows can make use of GitHub Actions context variables to gain information about the workflow runs, variables, runner environements, jobs and steps.

Context Variables:

github context is freuqently used for retrieving useful information of the current workflow run. Some examples used(but not limited to) include :

github.actoris used to detect the username of the user that triggered the workflow event. It can also be used to detect bots who trigger the events.github.event_nameis used to detect the name of the event that triggered the workflow. In the context of markbind, this is often used to check if the triggered workflow is of a particular event (such as pull request) before running the script.

A potential limitation arises when using github.actor to detect bot accounts. That is, if the bot is a github account that is automated by a user. In this case, github currently has no way to detect such accounts.

- Proposed potential workaround: Manually identify the human bot accounts.

Bots

Markbind uses the all-contributor bot to add contributors to automate the process of adding contributors to the project

LocalHost

Local testing of sites often uses localhost to run up a local server. This often resolves to the IP address of 127.0.0.1.

Markbind allows users to specify the address of localhosts in the IPV4 format. It does not support specifying IPV6 IP addresses.

- IP addresses that starts with 127 are reserved and are “local loopback addresses”, this means it references a device on the private , local network

- Outside devices cannot reach local loopback addresses, making it suitable for testing.

- Locally, localhost acts as the domain name for the loopback IP address 127.0.0.1

CORS (Cross-Origin Resource Sharing)

Cross origin resource sharing (CORS) is a mechanism that allows a web client to requests for resources over the internet. This can be things like third party APIs, videos, fonts, etc.

Cross-origin: A resource URL that is not the same as the URL of the visited browser.

Importance of CORS

The issue of cross site forgery issues: malicious attackers can trick users to execute unwanted actions on a web application while being autenthicated. For instance, if a user is logged into a banking application, the attacker can trick the user into loading an external website on a new tab. Then, using the cookie credentials of the user, the attacker can impersonate the user and access banking information of the user.

One solution built around this issue is the same origin policy. This policy specifies that clients can only request for a resource with the same origin as the client's url.That is, the protocol, port and hostname of the client's URL should match with that of the server. Any cross origin requests are not allowed by the browser. This means that any website you visit on the browser cannot access any other resource such as your email invox or online banking application as they belong to a seperate origin.

Same origin policy is like a ninja working in the shadows, preventing malicious users from trying to attack you. However, such tight restrictions means browsers are not able to access data across different origins. This may be necessary for applications that rely on external services such as calling third party APIs. This is where CORS come into place - it extends SOP by relaxing SOP in a controlled mannner.

The workings of CORS

CORS is primarily used when there is a need for a browser to pull or share data from an external resource. The request-resposne process is as follows (assume HTTP):