Knowledge gained from Projects

CATcher:

MarkBind:

RepoSense:

TEAMMATES:

- Chang Weng Yew, Nicolas

- FANG JUNWEI, SAMUEL

- JAY ALJELO SAEZ TING

- LIU ZHUOHAO

- MOK KHENG SHENG FERGUS

- ZHAO JINGJING

- Zhang Ziqing

CATcher

Goh Yee Chong, Gabriel

Error messages and Hint labels in Angular forms

Forms have a FormGroup, where each part of the form is controled by a FormControl component.

In the ts file, Validators check and ensure the form is valid for submission. If it is not, the submit button is greyed out.

In the html file, the individual form input boxes are built, and shown with *ngIf statements. <input> also has additional parameters to specify whether the input is required and the max length of the input. The form error messages are programmed here in the html file, for example:

<mat-error *ngIf="title.errors && title.errors['required'] && (title.touched || title.dirty)">

Title required.

</mat-error>

Hint labels can be used to show the remaining characters in a input box with limited characters when approaching that limit.

While a string with validators could be used to instantiate a FormGroup, a FormControl element ensured that validators are processed such that the form error messages are shown in components that are children to other Angular components. (PR #861)

Resources:

Lifecycle Hooks in Angular

After a component is instantiated, the ts file has lifecycle hooks in the form of methods that initialize or modify the component content or state. These methods are prefixed with ng.

The sequence in which these lifecycle hooks are called:

- OnChanges

- OnInit

- DoCheck - repeated

- AfterContentInit

- AfterContentChecked - repeated

- AfterViewInit

- AfterViewChecked - repeated

- OnDestroy

Most notably used is ngOnInit, which used to instantiate the component variables. In CATcher, ngAfterViewInit is also used to load issues after the component has been initialized.

Resources:

- Lifecycle Hooks

- Angular lifecycle hooks explained

- What's the difference between ngOnInit and ngAfterViewInit of Angular2?

- Video: Angular - Zero to Hero - Life Cycle Hooks

ViewChild in Angular

While html files can add custom child components using custom defined decorators such as <my-custom-component>, the parent component may need references to these children components to add change content or add interactability. ViewChild, ContentChild are queries to access child components from the parent component.

For example:

@ViewChild(ViewIssueComponent, { static: false }) viewIssue: ViewIssueComponent;

Static vs Dynamic queries

Static queries are queries on child components that do not change during runtime, as such result of the reference to the child component can be made available in ngOnInit.

Dynamic queries are queries on child components that change during runtime, so reference to child component can only be made available in ngAfterViewInit.

Resources:

- Angular @ViewChild

- Static query migration guide

- Video: Better concepts, less code in Angular 2 - Victor Savkin and Tobias Bosch

- What's the difference between @ViewChild and @ContentChild?

Property Binding

Square brackets in html tags in angular indicate that the right hand side is a dynamic expression. For example:

<span

(click)="$event.stopPropagation()"

*ngIf="issue.testerDisagree"

[ngStyle]="this.labelService.setLabelStyle(this.labelService.getColorOfLabel('Rejected'))" >

The dynamic expression can be evaluated in the context of the corresponding .ts file of the html file.

Event binding

Parenthesis within html tags, for example (click) are used to call the component's corresponding method on click. In the example above, $event.stopPropagation() is a Javascript call that prevents the label Disagree within the issue bar from being clickable because its parent is clickable. The click event from parent is stopped from propagating to this particular child.

Resources:

- Angular Doc Property Binding

- Angular Doc Event Binding

- StackOverflow simple summary

- StackOverflow Stop Propagation

Git Rebase

Below is a link to a good explanation with visuals to explain rebasing. Rebasing helped to clean the commit history of my main branch after accidental merging with other branches.

Resource:

Github File Upload API: CreateFile vs CreateTree

The API for committing a single file and committing multiple files at once are different. Attempting to do multiple single file commits in a short duration of time will likely cause HttpError to occur. The current recommeded fix is to put in sleep before the next single file commit, or merge multiple single file commits into a single multiple file commit.

Resources:

Lee Chun Wei

Observer design pattern (in rxjs)

In CS2103/T, I learnt about the Observer design pattern but I did not really see it being used often until I had to work with rxjs in Angular.

Observables are used in Angular to provide a form of lazy transfer of data to the observer in both a synchronous and asynchronous manner, in a beautiful functional programming style. For example, if we want to get API data of the authenticated user from github, we can call

const observable = from(octokit.users.getAuthenticated()).pipe(

map((response) => response['data']),

catchError((err) => throwError('Failed to fetch authenticated user.'))

);

from(...) will convert the Promise object octokit.users.getAuthenticated() into an observable. We can then change the values returned by this promise by using pipe(...). In the example, the map(...) function in the pipe will only retrieve the 'data' values of the response from the API call. Afterwards, we can subscribe to the observable somewhere else by calling subscribe(...). In the example below, we will subscribe to the observable and print the data to the console.

observable.subscribe((data) => console.log(data));

rxjs provides a wide range of transformation operators (such as map) that will make programming with lazy data (like asynchronous API calls) easier. For more information, visit https://rxjs.dev/guide/overview.

Logging

Though it might seem to be redundant at first, log messages are helpful to developers when users report a bug that cannot be resolved easily. In CATcher, logging is used at various levels, from logging error messages to logging network HTTP requests to the github server. The octokit API library that is used to perform API requests to the Github server allows developers to customize the various logging levels, so that there is no need to manually hard-code the logging messages for every method that has a Github API call. By separating the various logging levels, developers can easily filter through the less important messages if there is a critical error. There are usually 4 levels of logging for the console (other than the general console.log), shown below in increasing severity:

- debug: Provides useful information to the developer in development mode. Usually debug messages are not very useful in production, especially for CATcher, since they take up a lot of space in the log. An example of a debug message can be the HTTP request details of an API call to Github.

- info: Provides information about a less important event occuring or a state change. Such information is not very important to the developers, but in certain situations, the developer may find such messages helpful.

- warn: Provides an important warning to the developer if there is an unexpected situation or bad code, but the application can continue to work. Developers should take note of such warnings and address these warnings if possible.

- error: Provides an important error message to the developer if one or more components of the application fails and stop functioning properly. This usually constitutes as a bug that developers should work on promptly.

View Encapsulation

Suppose you want to create an Angular component which contains a child component that needs to be styled. The best way is to apply the CSS styles to the child itself. But sometimes, it is not possible or just too tedious. For example, in this CATcher PR, I want to apply markdown-like CSS styles to a custom generated HTML code. To do this, I have to put the HTML inside an article tag, set the class of the article to "markdown-body", and let the css file handle the styling. However, the CSS styles did not get applied to the generated HTML. Why?

To solve this issue, we will have to learn about view encapsulation in Angular. There are currently 3 types of view encapsulation in Angular: ShadowDom, Emulated (default) and None. The table below summarizes the different view encapsulation modes.

| View Encapsulation | Description |

|---|---|

| ShadowDOM | In Angular, ShadowDOM uses the browsers's internal Shadow DOM API to encapsulate/hide the Angular component's view inside a ShadowRoot and applies the styles specified only to the component itself. See mdn docs for more details on what is ShadowDOM. The drawback of this is that some browsers may not support ShadowDOM (but major browsers like Chrome and Firefox support ShadowDOM). |

| Emulated | This is the default view encapsulation in Angular. It emulated the behaviour of ShadowDOM, without using ShadowDOM. Angular does this by adding unique attributes to the component templates and edits the css by applying the attributes too. See the picture below for an example. |

| None | Perhaps the easiest to understand. Unlike ShadowDOM and Emulated, the HTML and CSS is untouched. However, this can cause the CSS to be applied to the entire webpage. We try to avoid using this if possible, in case of styling conflicts. |

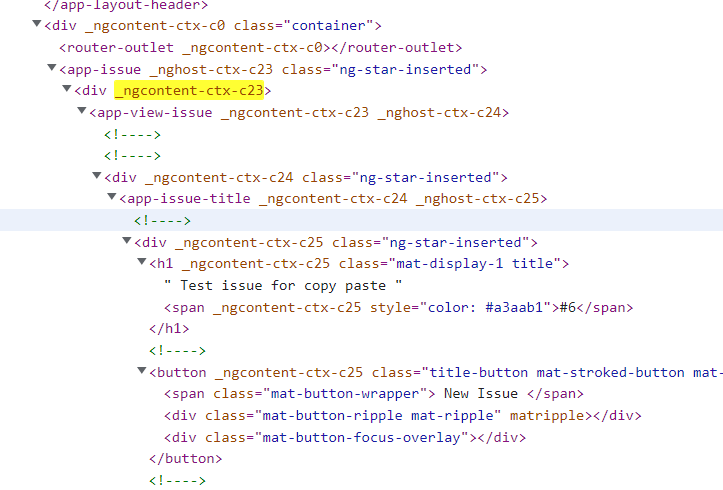

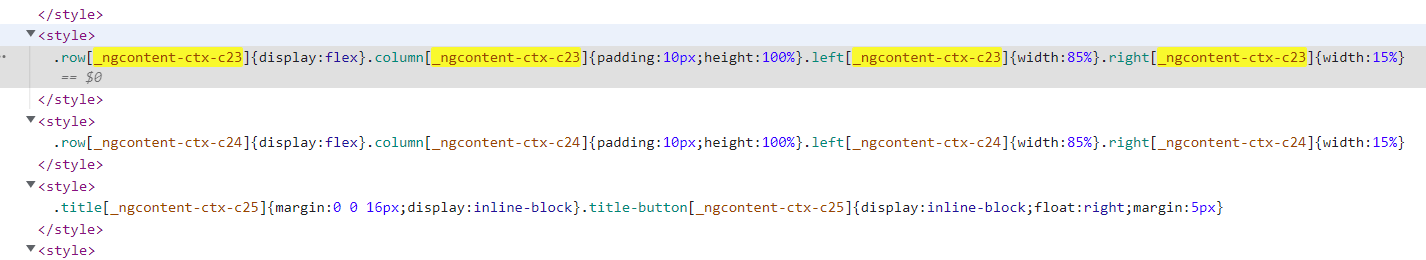

Example of emulated view encapsulation:

Notice that there is a special _ngcontent-ctx-c23 attribute applied to the elements within app-issue component, while the app-issue element itself acts as a "shadow host", hence the attribute _nghost-ctx-c23. However, the content of the child component app-issue-title will not have this attribute. In the bottom picture, we see that Angular has changed our CSS styles such that it will only be applied to elements with attributes such as _ngcontent-ctx-c23. This prevents the style from being applied throughout the web page, hence emulating the behaviour of ShadowDOM.

Going back to the initial question, why were the styles not applied to the generated HTML? This is because we were using the default emulated view encapsulation, so the imported CSS file will be edited to include the unique attribute _ngcontent-ctx-cXX. The generated HTML however, does not have this attribute. Therefore, we will need to set the view encapsulation to None, so that we can apply the markdown-like CSS styles to the generated HTML.

Authentication and authorization

In CATcher, we need to perform authentication to ensure that users who log in are Users are actually who they claim to beauthentic. Authorization is also needed to ensure that only the relevant students, tutors and admins can log in to CATcher. To achieve this, CATcher uses Github OAuth to authenticate and authorize users.

OAuth is an open-standard protocol for authorization. By using Github OAuth, the user first authenticates himself by logging into his own Github account. Then, Github will ask the user for permission to allow CATcher to access certain content and perform certain actions. By accepting the permission request, Github will authorize CATcher to act on the user's behalf, for example, to create a new issue during PE. Here are the details behind the OAuth process when a user tries to log into CATcher:

- User selects a session and logs in.

- CATcher will redirect the user to Github to log in, and specify the scope of permissions needed for CATcher.

- The user is redirected back to CATcher. Github will send an authorization code to CATcher.

- CATcher will request for an access token from Github. However, it has to do so through a backend server (gatekeeper).

- The browser receives the access token from Github via gatekeeper. This access token will be used to authenticate future Github API requests made by the user.

Thanks to OAuth, users can grant third-party applications permissions to access, modify or delete content on another account. CATcher benefits from this because users do not need to use the Github interface directly to do their PE.

However, the access token must be securely kept, since anyone who has the access token can perform actions on behalf of CATcher.

More resources here: Github OAuth guide, IETF RFC6749 document

Lee Xiong Jie, Isaac

CSS Flexbox

Flexbox is used to order, align and space out items in a one dimensional container (i.e. a row or a column), even when the size of the items are unknown or dynamic.

Items can be given a default size (flex-basis), and can also grow (flex-grow) and shrink (flex-shrink) according to the extra space in the container (or lack thereof). These three properties can be controlled with the flex property, e.g.

.item {

flex: 0 1 10%

}

where the three parameters correspond to flex-grow, flex-shrink and flex-basis respectively. In this case, the default width of the item is 10% of the width of the container, and it does not grow nor shrink relative to the other items (assuming the other items also have their flex property set to 0 1 auto).

The flex-basis property can also be set to the content keyword, where the width of the item is based on the content within the item (e.g. if it contains text, then the width of the item is the length of the text string). This allows for dynamically sized items within the container, which may enable the layout to look cleaner.

For a helpful summary of flexbox properties, visit https://css-tricks.com/snippets/css/a-guide-to-flexbox/

Prettier, husky and pretty-quick

Prettier is a tool to format code according to a given code style automatically. Unlike a linter such as TSLint, prettier only cares about formatting, rather than detecting programming errors. Prettier supports both Typescript and Angular, which CATcher is written in.

Since it is quite wasteful to run prettier to format the entire codebase every time a change is made, so a more efficient method is to format the codebase once, and then format only the changes made during each commit.

This is where the husky tool comes in, which enables hooks to be run before each commit. The relevant hook here is pretty-quick, and this formats the changed/staged files during each commit. This frees developers from having to fuss with maintaining code formatting or fixing formatting mistakes, leading to less frustration.

For more information, visit Prettier and husky

Arcsecond

Arcsecond is a zero-dependency parser combinator library for Javascript that is now being used in CATcher to parse GitGub issues and comments.

Previously in order to parse the comments, we used the regex string (${headerString})\\s+([\\s\\S]*?)(?=${headerString}|$)\gi which is neither human readable nor maintainable. In addition, this string only finds matches - more regex is used to extract relevant information from the comments.

In comparison, arcsecond offers human friendly parsers such as str, char, letters, digits, between and so on, and these parsers can be composed and run in sequence. This makes building and maintaining parsers much easier. In addition, arcsecond also allows you to extract certain information from a string (as opposed to the entire string) by way of the coroutine parser.

For example, take the string "Today is Wednesday and the weather is 30 degrees Celsius", and you want to extract the day (Wednesday) and the temperature (30). One parser that can achieve that is:

const dayWeatherParser = coroutine(function*() {

yield str("Today is "); // parse and ignore

const day = yield letters; // parse 'Wednesday' and store

yield str(" and the weather is "); // parse and ignore

const temperature = yield digits; // parse '30' and store

yield str(" degrees Celsius"); // parse and ignore

return {

day: day,

temperature: temperature

}

})

This allows us to build complex and versatile parsers easily, yet in a way that is clear and understandable. For more information, check out the tutorial here

Jasmine

Jasmine is a testing framework for Javascript code. In Jasmine, test suites are functions in describe blocks, and each spec is also a function in an it block.

For example, here is a suite that tests a certain function:

function testMethod() {

return true;

}

describe("testMethod suite", () => {

it("testMethod should return true", () => {

expect(testMethod()).toBe(true);

});

});

Expectations are built with the function expect which takes a value (testMethod() in the example above), and is chained with a Matcher such as toBe, toEqual or toBeGreaterThan. This provides greater flexibility than say JUnit's assert methods, since one assert method corresponds to one condition.

Since test suites and specs are functions, normal Javascript scoping rules apply, so variables can be shared between specs. In addition, there are separate setup and teardown methods such as beforeEach and afterAll.

For more information, check out the Your First Suite tutorial here

MarkBind

Hannah Chia Kai Xin

Frontend

Rounded vs Square edges for signalling functionality

Researching whether to use rounded corners, sqared off corners, or fully rounded boxes was interesting from a usability perpective. Some resources I used to learn about them:

- https://ux.stackexchange.com/questions/40744/mixing-rounded-corners-and-square-corners

- https://medium.com/@carolinalina/how-to-design-ui-buttons-that-convert-d5ebb1080969

- https://prototypr.io/post/the-rounded-user-experience/

The information from https://uxdesign.cc/make-sense-of-rounded-corners-on-buttons-dfc8e13ea7f7 in particular made a case for fully rounded buttons for primary content when you have space to spare, to direct users attention to those buttons. They suggested to avoid fully rounded buttons when many are used next to each other as it may not be obvious which to click. I used this information to infer what the average user might takeaway if minimized panels were pills rather than rounded buttons.

Vue

- https://v1.vuejs.org/guide/instance.html

- Scoped styles: https://vue-loader.vuejs.org/guide/scoped-css.html, also informing the issue I created #1768 in MarkBind

- Learning about slots: https://learnvue.co/2021/03/when-why-to-use-vue-scoped-slots/#conclusion, https://www.smashingmagazine.com/2019/07/using-slots-vue-js/

Scrollbars

Using overflow-x: scroll on the default navbar, seemed to cause the dropdown to break.

After a few stack overflow posts and reading, I found this article: https://css-tricks.com/popping-hidden-overflow/ that explains that setting overflow-x sets overflow-y as well, and it's just not possible to have a dropdown peep out of a scrollable overflow without setting positions relatively. This discussion with the offered solution was also interesting.

I briefly explored existing libraries like https://floating-ui.com/. Libraries like this exist to make it easier to accomplish this surprisingly complex task.

I also learned about the accessibility of scrollbars (https://adrianroselli.com/2019/01/baseline-rules-for-scrollbar-usability.html) and (https://www.w3.org/WAI/standards-guidelines/act/rules/0ssw9k/proposed/), which discussed what goes into making scrollbars accessible. Visually, visible scrollbars provide an obvious indication that there is more content. These design tips on scrollbars (https://www.nngroup.com/articles/scrolling-and-scrollbars/) were also interesting, particularly the note to avoid horizontal scrolling wherever possible.

This informed my decision that it would be better not to make a scrollable navbar the default, but have a dropdown menu with more options for smaller screens

[]::webkit-scrollbar](https://developer.mozilla.org/en-US/docs/Web/CSS/::-webkit-scrollbar) pseudo-element does not work for all browsers and should be used with caution.

Open source dependencies

ghpages

Used when researching the deploy and build commands for MarkBind.

Commander

Used to write CLI programs.

- https://www.npmjs.com/package/commander

- https://en.wikipedia.org/wiki/Usage_message (conventions in defining parameters)

jest

Mainly studied the changelog to see if this would break when dependencies were updated.

- Introduction: https://jestjs.io/ and repository(https://github.com/facebook/jest)

- relevant blog post: https://jestjs.io/blog/2021/05/25/jest-27

- changelog

- Jest testrunners: they plan on changing the default test-runner from

jasmine2tojest-circusin version 27, withjasmine2to be discontinued afterwards. Though I think we're usingjasmine2and notjest-circus, but MarkBind we never explicitly specify a change from the default

fs-extra

Handy utility that I ended up using extensively

Git

CI pipeline (particularly with git):

- https://docs.github.com/en/actions/automating-builds-and-tests/about-continuous-integration, particularly the section on github actions

- Follow-up research about github actions

- Basic research about Travis CI and Netlify

Logging Framework

- https://www.sentinelone.com/blog/logging-framework/ as an introduction

- https://se-education.org/se-book/errorHandling/#-12 also as an introduction

- https://github.com/winstonjs/winston (library used with markbind)

Ways Versioning is Implemented

- Learn about semantic versioning: https://semver.org/

- Alternate versioning solutions:

Javascript

Javascript with regard to object oriented programming

Looking into this was inspired by the issues on refactoring the large core/site/index.js file which is over 1.5k lines into more manageable class. At present, most of the file is made up of the Site class, which makes sense from an object oriented perspective. All the functions which are supported by Site are things which affect what the site itself holds or does: generating itself, deploying itself, initialising itself.

One suggestion for refactoring would be separating out each command into separate files. We could abstract away the command logic might be separating each command into classes, having each command inherit from a Command class, and having the site class just generate and execute each command when it is called to do so. But is this necessary or desirable?

Java and Javascript are different in that Java is class based and Javascript is prototype-based. Class based languages are founded on the concept of classes and instances being distinct, where classes are an abstract description of a set of potential instances which have the properties defined in the class - no more and no less. Prototype based languages have a 'prototypical object' which is the template used to create a new object, but once you create it or at run time the object can specify its own additional properties and be assigned as the prototype for additional objects (source: mozilla, class-based vs prototype based languages)

Nevertheless, Site.js does use "classes" of managers to manage externals, etc, so perhaps in production avoiding classes is not a big deal. Would still be a useful abstraction to manage the complexity of the file.

Certain functions in javascript

Jovyn Tan Li Shyan

Vue Lifecycle Hooks

I learnt about Vue's lifecycle hooks while working on popovers as I was facing errors with undefined instance properties.

For instance, the $el property is only resolved after the instance is mounted.

When working with instance properties such as $el, I also learnt more about the different lifecycle hooks and when different properties are instantiated.

I came across this important note while browsing the docs on lifecycle hooks:

Don’t use arrow functions on an options property or callback, such as

created: () => console.log(this.a)orvm.$watch('a', newValue => this.myMethod()). Since an arrow function doesn’t have a this, this will be treated as any other variable and lexically looked up through parent scopes until found, often resulting in errors such asUncaught TypeError: Cannot read property of undefinedorUncaught TypeError: this.myMethod is not a function.

Resources

Vue Templates & Slots

Templates/slots are a way to pass content from parent to child components, and are used by bootstrap-vue (in place of props) in cases that require reactivity. Using slots could make the code more readable. It's also a convenient way to pass components into another component, and enables reactivity in the popover use case.

Resources

Data attributes

I came across the use of data attributes (data-*) to pass data between different components/nodes in MarkBind.

I thought it was an interesting feature of HTML that allows for extensibility as users can store extra properties on the DOM without interfering with standard attributes.

It also guards against future changes in standard HTML attributes (i.e., avoids the problem of using a non-existent attribute that may become a standard attribute in a future version of HTML).

This was something that I was previously not aware of so it was interesting to see how it could be used in a practical scenario.

Resources

Server-Side Rendering

Some things that helped me solve SSR/hydration issues this week:

- Conditionally render data when it has been fully loaded

- Take note of the different lifecycle hooks (

mountedonly runs on the client)- Vue's docs for lifecycle hooks (linked above) were once again a good resource

- Take note of how the Vue component will be compiled, ensure that the HTML is correct and aligns on both client- and server- side

Resources

- Data reactivity on the server

- Data reactivity is unnecessary on the server, and is disabled by default.

mountandbeforeMountwill only be executed on the client

- Client-side Hydration

- Instead of throwing away the markup that the server has already rendered and re-creating all the DOM elements, we “hydrate” the static mark-up and make it interactive

- In development mode, Vue will assert that the client-side generated virtual DOM tree matches the DOM structure rendered from the server

- If mismatch: bail hydration, discard existing DOM, render from scratch

- This is disabled in production for performance reasons

- What to do when Vue hydration fails | blog.Lichter.io

- This resource was very helpful in debugging the source of hydration errors: Solving the hydration failure

Useful Git tricks

--skip-worktree

After adding some config files (e.g. .ignore for Ag), I wanted to ignore these config files to keep my git history clean.

I first tried using git update-index --skip-worktree to "hide" the .ignore file, but this didn't work because the file wasn't indexed yet (so update-index doesn't make sense).

Instead, the following approach worked:

- Add

.ignoreto.gitignore git update-index --skip-worktree .gitignore

- You can "undo" this in the future using the

--no-skip-worktreeflag

From here, git status shows no changes to the .gitignore file, and .ignore is no longer tracked.

Another option was --assume-unchanged instead of --skip-worktree (comparison is linked under "Resources").

Resources:

npm Dependencies

While updating package.json, I had to clarify the meaning of some versioning syntaxes:

~1.2.3means "approximately equivalent to v1.2.3" => accepts all future patch versions, i.e. ≥1.2.3 and <1.3.0^1.2.3means "compatible with v1.2.3" => accepts all future minor/patch versions, i.e. ≥1.2.3 and <2.0.0@org/package=> 'org' is a scope, and 'package' is a package name within the scope

Some learning points on the different types of dependencies:

- devDependencies - only required during development (e.g. external tests, documentation)

- installed on

npm install, unless the--productionflag is passed

- installed on

- peerDependencies - compatible with, but not required (e.g. the module may expose a specific interface specified by the host documentation)

- installed if missing, unless there is a dependency conflict

- bundledDependencies - will be bundled when publishing the package

- Can be specified as a boolean or an array of strings (package names).

truewill bundle all dependencies,falsewill bundle none and an array of package names specifies which packages to bundle.

- Can be specified as a boolean or an array of strings (package names).

Resources:

- Specifying version ranges for dependencies

- Scope

- devDependencies, peerDependencies, bundledDependencies

- Explanation on different types of dependencies

- When to use bundled dependencies

Simple scripting

Bash scripting was very useful when I needed to modify a large number of files (like vue.min.js for upgrading the Vue version, or bootstrap.min.* for upgrading Bootstrap).

I picked up a bit of bash scripting and used this to mass copy the minified files:

find . -name "*vue.min.js" | xargs -n 1 cp ~/Downloads/vue.min.js

find . -name "*vue.min.js"finds all filepaths ending withvue.min.js|uses the output offind . -name "*vue.min.js"as input for the right side|is called a pipe.- Redirects the left-hand side stdout to stdin of the right-hand side

xargs -n 1converts each line of input to arguments for the commandcp ~/Downloads/vue.min.jsxargsconverts input from stdin to arguments for a command.-n 1means to use only one argument for each iteration. Since arguments are space-separated, this essentially breaks the input up by lines-pcan be used to echo each command iteration and prompt a confirmation before proceeding. (yto confirm)

Another helpful command: using sed, delete all lines containing 'bootstrap-vue' in HTML files

find . -name "*.html" | sed -i '' '/bootstrap-vue/d'

find . -name "*bootstrap-vue.min.css" | xargs -n1 rm

Replace all instances of font-weight- with fw-:

ag -l 'font-weight-' | xargs -n1 sed -i '' 's/font-weight-/fw-/g'

Resources

Traversing the DOM

While working on the Bootstrap 5 migration, due to changes in how Bootstrap handles event listeners, several components' logic had to be modified to maintain the same behaviour. This was done by using selectors and methods to modify the DOM.

- Using selectors: recursively (

.find) or only on immediate children (.children) - Toggling classes on a Vue slot: by using selectors and

.toggle

One useful trick for CSS selectors is that you can right-click on an element in Chrome Devtools and copy its CSS selector!

Resources

Chrome Devtools

While testing and debugging, I found many features of Chrome Devtools to be useful:

- Copy the CSS selector of an element

- View all event listeners on an element

- Force a state on an element (e.g. hover, focus)

- View the properties of an element, which can be queried and/or modified with JavaScript

ONG JUN XIONG

Special characters encoding on URI

On windows, file paths are separated by the directory separator character which

is a \. This is different from the linux and mac's / separator. When

building tools to be used cross-platform, it is important to be able to parse

and encode file paths correctly. This is especially important when dealing with

URI since they only accept 84 characters with \ not being one of them. Hence,

if a URI contains a \ character, it will be encoded as %5C and may cause

issues with the path on a webpage. Read more about this

here.

HTML Canvas drawing

I used HTML canvas to help with image annotation. I choose to use HTML canvas since it provided the best performance and I felt it was well suited to the task of drawing arrows and annotating over images.

Below are some things that I found useful while working with HTML canvas.

The HTML <canvas> element is used to draw graphics, on the fly, via

JavaScript. The <canvas> element is only a container for graphics but the

actual drawing of the graphics is done using javascript by the user. I made use

of lines, boxes, text and image to help annotate over images. Once an image/line

is drawn, there is no way to erase that line unless the whole canvas is wiped

out (or draw a white box over that section). Due to this feature of canvas, I

had to parse all the image data and set the proper width, height and coordinates

directly before actually beginning to draw the canvas element. This made drawing

individual annotations harder since I had to account for the starting position

of each element and make sure that they are all in line.

I also found that resizing the canvas width or height will cause the whole canvas to be reset to a blank slate. This was one of the bigger issues I ran into since now I had to pre-calculate the final size of the canvas.

Resources:

DIVs vs SVG vs HTML Canvas for drawing

Advantages

DIVs are simple to work with and are the most common components for frontend developers. They are quite user friendly and are good for simple animations and drawings.

SVGs scale well and are a good choice for complex drawing. They are also relatively fast compared to DIVs and are computed in a way that there is no loss of quality at any scale.

Canvas is a good choice for lines, boxes and text drawing. It is the fastest of the 3 methods and can be used to draw over an existing image. This functionality makes it a good choice for annotating over any given image.

Disadvantages

DIVs did not offer the flexibility of SVG and HTML canvas, especially for drawing more complex shapes like arrowheads. Positioning of div elements also tends to be clunky as I would have to work with CSS positioning as well. This might be an issue when scaling is involved since relative position tends to change significantly depending on the size of the screen.

SVGs were complicated to overlay over an image and creating many different custom SVGs would require a use of a external library (or very complicated code). SVGs would also have to be referenced for each rended object and this would be troublesome since I am passing child components to the parent (making references difficult due to the similar names).

HTML canvas is complicated to work with and requires a lot of javascript. It also requires the image to be drawn in one go (depending on application) as often times drawing over a already drawn image does not preserve quality well.

Resources:

Final choice: DIVs for annotating over images

DIVs provided more flexibility since using CSS on them is simple compared to Canvas which is drawing over the image directly. Using components also allowed for the implementation of hover actions and was much simpler than the implementation in Canvas.

Attributes and Query Selector

The querySelector method is used to find elements in the DOM. I used it to get

the info of all child classes from the annotation-wrapper. This allowed me to

get the attribute data needed to build the html canvass before drawing. I choose

to use querySelector over emitters due to the way my components where

structured. By using querySelector adding more annotation features to the

class will be relatively easy since all I have to do is add another method to

query the new element id.

Resources:

Debounce - JS

In JavaScript, a debounce function makes sure that your code is only triggered once per user input.

Vue Element Selectors

This feature of Vue was really awesome. It allowed me to get elements from the

DOM without having to use the base javascript querySelector method.

Element selectors are used to find elements in the DOM. I used them to get the image of the parent element and from there managed to calculate the width and height of the image.

Element selectors are generally used in the created() section of vue but can

be used elsewhere too.

Example: this.$el.qureySelector('.image-wrapper')

CSS pointer redirection

Learnt that in CSS if you have 2 overlaying areas, and you want to ignore the

top area, you can use pointer-events: none; on the top level element and then

use pointer-events: all; on the bottom level element. This makes it so that

all pointer events in that area are redirected to the proper element.

Vue computed vs Vue method

Generally not much difference between computed and methods. However the key differences are:

Methods:

- Similar to JS functions that are used to perform actions on the data.

- Will only run when called

- Can take in parameters

Computed:

- Re-rendered every time the data changes

- Properties are available in data store

- Automatically cached

- Good for expensive operations

- Cannot send parameters

Resources:

RepoSense

CHAN JUN DA

FileSystems, Bash and CMD

Specifying File Paths in Command Line Arguments

General:

- File path arguments should nearly always be wrapped in quotation marks to accommodate file paths containing spaces.

For UNIX file systems:

/is the separator used by UNIX systems- UNIX file systems allows for virtually any character to be used in the filename except

/andnull(and some specific restricted names). As such, filenames and paths containing non-standard characters can lead to unexpected errors in program execution. It is important to be aware of such a possibility.- For example, in RepoSense, a local repository's path is first read as input and then used as a String in a CLI argument. A valid \ character in a filename will end up behaving as an escape character in the new CLI command.

For Windows file systems:

- \ is the separator for Windows file systems. However, it is also compatible with

/. All/read in a file path are replaced with \ as per Microsoft's documentation. - Java's implementation of the

Paths::getmethod performs Windows file path validation if run on a Windows system. However, if used in a test method run from UNIX, the behaviour will differ.

Relative and Absolute File Pathing Specifics

- Both

./and../function similarly in both UNIX and Windows (replacing/with \). ~is an absolute pathing feature used in Bash which expands to the user's home directory.- If tilde expansion is used as follows:

~chan-jd/"some file path"/then~chan-jdis expanded to the$HOMEdirectory of the userchan-jd. - If wrapped within quotation marks, it becomes a literal

~char in the file path. Thus, to use both together, the tilde has to be left out of the quotation marks:~/"some test file". ~does not work in Windows command prompt but does work in Windows PowerShell

- If tilde expansion is used as follows:

- Windows has various methods to apply the current directory to a path

- If a file path starts with a single component separator like

\utilitiesand the current directory isC:\tempthen normalization producesC:\utilities - If it starts with a drive without component separator like

D:sources, it looks for the most recent directory accessed onD:\. If it isD:\tempthen the normalization producesD:\temp\sources. - Otherwise, a path like

example\pathwill be prepended with the current directory on normalization.

- If a file path starts with a single component separator like

Special Characters in Bash String Arguments

- Double quoting an argument will still allow for special characters like

$(variable substitution) to work. - Single quoting preserves the literal value of every single character within the quotes. Another single quote will end the quotation and there is no way to escape it.

- Reference manual link

- This stackoverflow post suggests a good way to handle quoting arguments containing both single quotes and special characters.

- Wrap all characters besides single quotes in

'. - For

', replace it with'"'"'. Consecutive quoted strings not separated by spaces are treated as a single argument in Bash. This quotes the single quote in double quotes where it does not have special meaning.

- Wrap all characters besides single quotes in

- A (possibly incomplete) list of special characters in Bash that need to be escaped can be found in this stackexchange post.

- Relevant note: On Windows CMD, the

'single quote has no special meaning and cannot be used to quote arguments in CMD. Only double quotes works for arguments containing spaces to be treated as a single argument.

Variable and tilde expansion in CMD and Bash

When we run something like java -jar RepoSense.jar some cli args, variable expansion and tilde expansion (for Bash) is performed for us before the Java program receives it.

- E.g. If I specify my repository as

~/reposense/Reposense, themainmethod in the Java program will receive the String/chan-jd/home/reposense/Reposensein itsargsarray. - This behaviour is mostly beneficial for us but can cause some non-uniform program behaviour when user has more than one way to specify their arguments. An example relevant to RepoSense is that users can specify their local repository file paths in both the command line or the

repo-config.csvfile. But when RepoSense reads the data straight from the CSV file, it does not perform the necessary expansions. Using the example above,RepoSensewill receive the raw version of the String,~/reposense/Reposenseinstead which might cause some issues. - One possible way to work around this is to

echothe command in CMD or Bash. The command output will include the substituted expansions.

GitHub

Deployments and Environments

- Environments can be viewed as the platform for which deployments are staged. There are generally fewer of them. For example in RepoSense, there is roughly two environments per active pull request for deployments.

- Environments can be viewed on the main page of a repository.

- They will linger so long as the deployment on the environment continues to exist and would normally require manual deletion.

- Deployments are requests to deploy a specific version of the repo such as a pending pull request. In the context of RepoSense, a single PR can have several tens of deployments if it is consistently updated.

- It is generally difficult to track and control deployments on the GitHub page itself.

- However, through the GitHub API, we can query all deployments relating to an environment and delete them. This will automatically remove the environment from the listing as well. This solution was taken from this stackoverflow post.

GitHub API

The GitHub API provides us with a way to interact with repositories via a RESTful API. Using curl commands:

- We are able to query (via

GET) for information such as branches, deployments and environments - We are also able to

POSTcommands to a repository to perform various actions such as deleting deployments. These generally require a security token which might not be available from a personal computer's CLI. When running GitHub Actions, it is possible to acquire one for the repository to perform actions such as closing deployments.

GitHub Actions

We must add GitHub action workflow files to the directory .github/workflows in order to automatically perform certain scripts on certain GitHub actions.

- The general workflow of a

.ymlworkflow file contains a declarationonwhich states under what scenarios will these actions be triggered- It is followed by a list of jobs. For each job, we can declare a name, platform to run on, environment variables, followed by sequential steps to perform.

- Under steps, we can use

runto run shell scripts similar to running the same command from a BASH terminal

- When setting up an environment to perform a specific workflow, we can generally choose exactly what OS to run, what versions of Java or other languages/packages to use.

- It is additionally helpful to be aware of what are the versions used by default for the various OS-es.

- Ubuntu 20.04 - here we can see the default Git used is 2.30.2. It might be helpful to be aware of such specifications as we might experience differing behavior in the future due to version differences.

- Related to RepoSense, and caused me quite a bit of trouble is that GitHub Actions uses the default timezone of UTC+00.00 which leads to some commits being assigned to the previous day as compared to if it were run locally on my own machine which is at UTC+8.

- It is additionally helpful to be aware of what are the versions used by default for the various OS-es.

Git Specifications:

Git Diff Output

The git diff command is used heavily by RepoSense for analyzing. The following are behaviour in the output that I discovered from self-testing as documentation about the behaviour was difficult to find:

The filename

/dev/nullrepresents a non-existent file. A mapping from/dev/nullto a file indicates a new file. Similarly, a mapping from a file to/dev/nullindicates a deleted file.For filenames containing certain special characters (i.e. the lines

--- a/some/file/nameor+++ b/some/file/name), the listing of filenames in the before-after comparison is adjusted slightly.- For files containing spaces, the filename will have a tab character at the end. E.g.

+++ b/space test\t. - For files containing special characters (not including space) such as \,

",\t, the filename will be placed in quotation marks. E.g.+++ "b/tab\\ttest/" - For files containing both of the above cases, the filename is first wrapped in double quotation marks followed by a tab character.

- For files containing spaces, the filename will have a tab character at the end. E.g.

These nuances in

git difffilename output may be important for filename designation as is done in RepoSense.

Git Log Output

Every commit in the log output displays the hash, author with email, date and message.

- If a user has set an email in their

git configbut set their Github 'keep my email address private' setting to true, web-based Git operations will list their email asusername@users.noreply.github.com. - It is possible to explicitly set the email to be empty through

git config --global user.email \<\>which will set it to<>. No email will show up in a commit done by such a user config.

Git Commit & Config Interaction

git commit commit information details can be found here.

- When committing with a

user.nameanduser.emailthat matches a GitHub account, commits on GitHub will be able to link the GitHub account to the commit.- However, committing with an empty email

<>will cause the commit to look like this on GitHub where there is no account linked to it.

- However, committing with an empty email

- It is possible temporarily set

user.nameas<>. However, it will not allow the user to commit, citing an invalid username.- It is also possible to set

user.nameas an empty string which is equivalent to resetting it. Git will not allow commits until auser.namehas been set.

- It is also possible to set

Git Changelogs

This section refers specifically to the changes to the Git tool itself. I have found out from my own experience that finding information relating to Git versions can be difficult as most search results relate to how Git can be used to manage those versions.

- The release notes can be found at https://github.com/git/git/tree/master/Documentation/RelNotes

- One (rather inefficient) way I have found to attempt to search for relevant information regarding when a specific change was made was to do the following:

- Clone the

gitrepository locally (Note the repository is quite large) - Create a bash script that takes in a string from the command line and

grep-es it against all the text files in the folderDocumentation/RelNotes

- Queries are generally quite fast. For example, if I wanted to find out when the

git blame --ignore-revs-fileflag was added, I could search forgit blameand see all relevant release notes and look them up manually. grepcan be set to quiet mode if I'm just looking for the file containing the reference. Otherwise, in non-quiet mode, the line in which the string match is found is printed. I can read the line and see if it is directly related to what I am looking for without having to look-up the file myself.

- Clone the

- Descriptions of the changes can be somewhat vague. It is usually easier to look for the specific command and see if it showed up in the specific release notes rather than trying to find keywords relating to the change in mind.

- For example the

ignore-revs-listflag addition was done in 2.23.0. The release notes reads"git blame" learned to "ignore" commits in the history, .... - For full details on the change, we need to go to the commit message itself. The commit messages are extremely detailed, e.g.

git blame --ignore-revs-filecommit can be found here.

- For example the

Javascript/Vue.js

New language for me, no prior experience. Learnt how to read and interpret most of the lines of Javascript in RepoSense.

- Javascript has

Objects which are a container of key-value pairs listing its properties, and some pre-defined methods.- Can perform operations on

Objects similar to what can be done to a map. UseObject.entries(object)to enumerate the key-value pairs. - The properties of an object can be created, edited and accessed from anywhere more similar to languages like Python's OOP

- Can perform operations on

- Javascript uses

(args...) => outputto write lambda functions as opposed to(args...) -> outputfor Java. - Javascript has something known as object destructuring where we can extract the properties of an object.

- Given something like

author = {name: JD, repo: RepoSense}, then doingconst {name, repo} = authorwould allow us to access thenameandrepoas local variables.

- Given something like

Interacting with objects on a webpage:

- Each click on an interactive component on the webpage fires off a

clickEvent.- In Vue, we can tag listener methods to a specific event a component might encounter.

- The event itself also contains details about the circumstances in which it was triggered such as other buttons that were pressed while the

clickEventwas created. - The default behaviour for clicking on an anchor link object is to open the hyperlink reference. This can be prevented with a listener that does

event.preventDefault().

HTML

hrefattribute of an anchor object provides the hyperlink reference to whichever URL page the anchor object is supposed to open.- Notably, if

hrefis not set and left asundefined, then a link will not be opened even iftargetis set.

- Notably, if

- In conjunction with the

hrefproperty, there is thetargetproperty which designates where the hyperlink will be opened.- Most commonly used in RepoSense is

target="_blank"which means to open a new window or tab. - There are other alternatives such as

_selffor opening in the same frame,_parentand_top.

- Most commonly used in RepoSense is

Regular Expressions

Java provides extensive support for Regex matching via the Pattern and Matcher classes which facilitate string parsing.

Patternis Java's class for handling various regex pattern.- The API provides a list of all the recognised syntax. In particular, I would like to go over the different quantifier types as I believe they are quite important for anyone who wants to build complex regex patterns.

Greedy quantifiers- what we see the most often:x?,x*,x+.- These will always try to be met if possible. They take precedence over

Reluctant quantifiers.

- These will always try to be met if possible. They take precedence over

Reluctant quantifiers- e.g.x??,x*?,x+?.- These matched only if it is required for the regex to fully match.

- For example, matching

(?<greedy>.*)(?<reluctant>.*)on the stringExample wordwill result ingreedy = Example wordandreluctant = "";

Possessive quantifiers- e.g.x?+,x*+,x++.- When Java does the matching from left to right, these quantifiers will be matched first. Once Java reaches the end of the line, it actually does backtracking to see if some greedy/reluctant quantifiers can give up some characters for the regex to match.

- However, possessive quantifiers will never give up their characters on backtrack even if it means that the matcher would otherwise have matched.

- Possessive quantifiers should be used carefully. However, their behaviour is more straightforward and easy to understand.

Matcher- We can perform

Pattern p = ...; p.matcher("some String")to obtain aMatcherobject. Most of the information that we want can be obtained from this object. - However, there are some points to be noted about the implementation of the

Matcherobject in Java.Matcherobjects (for Java 8 and 11) are initially just a mutable container containing the regex and the String. Matching logic has not yet been performed.- Suppose we have named groups within our regex pattern. We have to run a

matchesorfindmethod at least once for the object to mutate and support group queries.- Calling a

Matcher::groupdirectly without first callingmatchesorfindleads to anIllegalStateExceptionas the object never attempted to match anything yet.

- Calling a

- We can perform

Regex testing can be particularly cumbersome, slow and difficult to grasp why the regex is behaving the way it is. I personally found this website regex101 which allows convenient testing of various regex patterns on different inputs. It also supports regex testing for different languages.

GSON package

Gson (also known as Google Gson) is an open-source Java library to serialize and deserialize Java objects to (and from) JSON. (Taken from Wikipedia's description)

- I used this baeldung.com guide to learn about converting JSON objects into HashMaps. They also have various other articles describing other features like deserialization to greater depth. Their API can also be easily found online. This is their 2.9.0 API.

- From my experience, the general way in which GSON reads a JSON file is to read it into a

JsonElementabstract class with four different types, aJsonArray,JsonNull,JsonObjectandJsonPrimitive.- Most of the types listed above are very similar to their Java counterparts. The one that stands out is the

JsonObjectwhich is more similar to the Javascript object as it is a collection of name-value pairs where each name is a string and each value is anotherJsonElement.

- Most of the types listed above are very similar to their Java counterparts. The one that stands out is the

- In order to read JSON files into more exotic objects that we might have previously defined, we can either use a

TypeTokenfor the GSONJsonParseror implement our ownJsonDeserializerfor each class we want to be read from a JSON file.- One naive way of parsing a JSON file into a Map is to do

Map map = new Gson().fromJson(JSON_FILE, Map.class);. However, this immediately runs into the problem where the map class itself is using raw types.To avoid this, we can use a

TypeToken. By creating a new type as suchType mapType = new TypeToken<Map<String, Commit>>() {}.getType(); Map<String, Commit> map = new Gson().fromJson(JSON_FILE, mapType);We are able to preserve the generics class types used.

- One naive way of parsing a JSON file into a Map is to do

Others

"file" URI Scheme

- Specification can be found here RFC8089.

- A file URL looks like

file://host.example.com/path/to/file.- Double slashes following the colon

://indicates that the file is not local andhost.example.comis the 'authority' which hosts the file - Single slash or triple slashes after the colon

:/or:///will both be treated as a local file. Everything after the last slash forms the path to the file.

- Double slashes following the colon

- A file URL looks like

cURL command

cURL is a command-line tool to transfer data to or from a server using support protocols. In particular, GitHub Actions shows many examples of RESTful API calls using the curl command.

RESTful API

- REST stands for Representational state transfer. It is an architectural style for an API that uses HTTP requests to access and use data. (description taken from here).

- GitHub has its own RESTful API where we can make queries to repositories and possibly post commands to a repo provided we have proper access rights. The return type for queries is normally in the JSON format.

Bash Shell Scripting

- Can use

.shfiles to write shell scripts that can be run in Linux environments - Can specify functions as

function_name() { ... }- Functional arguments can be referenced from within the function declaration block with

${1}referring to the first argument,${2}referring to the second argument, etc. - These functional arguments can be specified by a user via

function_name "$argument1" "$argument2" $@can also be used to refer to all of the shell script's CLI arguments. It can be iterated through like an array.

- Functional arguments can be referenced from within the function declaration block with

Other popular remote repository hosts

- Any remote site that allows access to a

.gitdirectory is capable of hosting a remote repository - Popular remote repository domains besides GitHub include sites like GitLab and BitBucket

- Interacting with these are nearly identical to interacting with GitHub, with

httpsandsshoptions to clone a repository. - Relevant to RepoSense: the paths to commits and other features are usually different between the sites. For RepoSense to support all these websites, it'll have to take into account the differences in the path to these resources on the website.

- Interacting with these are nearly identical to interacting with GitHub, with

IntelliJ Inspect Code

The Inspect Code tool allows IntelliJ to look for common code quality issues such as

- Declaration redundancy

- Probable bugs

- Proofreading

Limitations:

- A full run of the code cleanup takes a long time to complete. A run on RepoSense itself takes ~10 minutes. This can be cut significantly shorter if we ignore the proofreading checks which cuts it down to 30 seconds.

- 'Unused' (as declared by IntelliJ) fields might not be redundant such as in

SummaryJson.javawhere the fields are necessary for conversion to a JSON file. - 'Unused' methods are sometimes necessary for other tools such as JavaBeans (requires setters and getters) and JavaFX (@FXML methods are not detected as 'used')

Thus, we should still exercise discretion in using this tool even if it is something as simple as removing unused variables or methods.

File locks

When Java opens a file for writing, it obtains a file lock in the form of filename.extension.lck with lck standing for lock.

This serves to support mutual exclusion for access to writing to the file. This appears in RepoSense when loggers attempt to write to the log file in which case, some kind of mutual exclusion gurantee is required.

- Notably, file locks (and other process resources) are released automatically when the main Java process

exits().- However, in some scenarios, releasing the lock only at the end of the entire process might be too late. One example I encountered is that when running

gradlewsystem tests, thelogfile lock is held on to for the entire duration of the run over multiple system tests. This causes issues with running consecutive system tests as I am unable to delete the previous system test's report due to the lingering file lock.

- However, in some scenarios, releasing the lock only at the end of the entire process might be too late. One example I encountered is that when running

- In some scenarios when the program does not exit properly, the

.lckfile might be left behind.- An example execution that results in this is running RepoSense via

gradlewcommand line with the-vcommand. Afterctrl+Cto close the server, the.lckfile persists even though it should have been cleaned up. For some reason, runninggradlewon Ubuntu 18.04 does not have the same issue.- However, this seems to be mostly harmless as it does not affect anything else.

- An example execution that results in this is running RepoSense via

- Suggested by this stackoverflow post, one way to easily release all log resources at the end of Java execution is to use

LogManager.getLogManager().reset()which immediately releases all resources.

Checkstyle

Their GitHub repository can be found here where we can view the features they are working on and bugs that other people are experiencing.

- In particular, there is a rather strange bug relating to

forceStrictConditionwhich is not able to properly detect parent lines of nested line wrapppings.- The relevant issues can be found in Issue #6024 and Issue #6020

- The above issues result in some somewhat strange enforcements, for example (taken from #6024), the code below has violations though it is what we expect the indentations to be

Arrays.asList(true,

Arrays.asList(true,

true, //violation

true)); //violation

- While the same line of code below is what passes the checkstyle but has unusual indentation.

Arrays.asList(true,

Arrays.asList(true,

true, // no violation, but should be

true)); // no violation, but should be

- There appears to be quite a distinct tradeoff here as without

forceStrictCondition, checkstyle only enforces the minimum required indentation level. A user's indentation can be as large as they desire so long as it does not exceed the line character limit.- However, if we were to

forceStrictCondition, then for nested line wrappings, the indentation being enforced can be somewhat strange.

- However, if we were to

Gokul Rajiv

Gradle

Gradle is a very flexible build automation tool used for everything from testing and formatting, to builds and deployments. Unlike with other build automation tools like Maven where build scripts written in XML (a widely hated feature of the tool), Gradle build scripts are written in a domain specific language based on Groovy or Kotlin, which are both JVM based languages. This means that it can interact seamlessly with Java libraries.

Gradle is also much more performant than alternatives like Maven because of its:

- Build caching: Only reruns tasks whose inputs have been changed.

- Gradle daemon: A background process that stores information about the project in memory so that startup time can be cut down during builds.

RepoSense recently added functionality for hot reload on frontend as a Gradle task, which made frontend development a lot more productive. Unfortunately, the feature isn't available on Linux because the package we were using (Apache Ant's condition package) could not specifically check for it. Migrating to Gradle's own platform package recently taken out of incubation, allowed us to support all 3 prominent operating systems.

References:

- https://docs.gradle.org/current/userguide/userguide.html

- https://docs.gradle.org/current/userguide/gradle_daemon.html#sec:why_the_daemon

- https://docs.gradle.org/current/javadoc/index.html?overview-summary.html

- https://docs.gradle.org/current/javadoc/org/gradle/nativeplatform/platform/package-summary.html

- https://ant.apache.org/manual/api/org/apache/tools/ant/taskdefs/condition/package-summary.html

- https://stackoverflow.com/a/31443955

GitHub Actions and API

Like Gradle, Github Actions help with automation of workflows like CI/CD and project management, and can be triggered by a variety of events (pull requests, issues, releases, forks, etc). It also has a growing library of plugins that make workflows a lot easier to set-up. I was surprised that there is some nice tooling support for GitHub actions on IntelliJ.

GitHub actions allows users to run CI on a variety of operating systems, such as Ubuntu XX.04, macOS and Windows Server (which is virtually the same as Windows 10/11 but with better hardware support and more stringent security features).

GitHub also provides a variety of API to interact with these objects. One quirk I came across with the API was that posting single comments on pull requests need to go through the issues endpoint instead of the pulls endpoint (the endpoint for pulls requires code to be referenced). This doesn't cause problems since issues and pulls will never have identical IDs.

GitHub deployment APIs also returns deployment information in pages, which is a sensible thing to do but can cause slight inconvenience when long running PRs have more deployments than can fit in a page.

Actions and APIs also have some great documentation:

- https://docs.github.com/en/rest/guides/getting-started-with-the-rest-api

- https://docs.github.com/en/actions/using-workflows/workflow-syntax-for-github-actions

- https://docs.github.com/en/actions/using-workflows/events-that-trigger-workflows

Git Remotes

Git exploded in popularity in large part due to Git hosting providers like GitHub. GitLab and Bitbucket are also commonly used Git hosts. RepoSense has thus far only largely supported GitHub, but there is a clear incentive to support other commonly used remotes. This is made a little challenging due to differences in conventions between the sites:

base_url | Commit View | Blame View | |

|---|---|---|---|

| GitHub | github.com | {base_url}/{org}/{repo_name}/commit/{commit_hash} | {base_url}/{org}/{repo_name}/blame/{branch}/{file_path} |

| GitLab | gitlab.com | {base_url}/{org}/{repo_name}/-/commit/{commit_hash} | {base_url}/{org}/{repo_name}/-/blame/{branch}/{file_path} |

| Bitbucket | bitbucket.org | {base_url}/{org}/{repo_name}/commits/{commit_hash} | {base_url}/{org}/{repo_name}/annotate/{branch}/{file_path} |

For example, Bitbucket uses the term 'annotate' instead of 'blame' because the word 'blame' is insufficiently positive.

Triangular Git workflows

In investigating the output of git remote -v, I noticed there were 2 remotes (fetch and push) for each remote name, which I was confused by. The utility of the separation of fetch and push remotes is for triangular workflows.

We are probably familiar with the common workflow for updating a branch on a forked repo which involves first pulling updates from upstream master, then making changes and pushing to our own fork. This requires remembering to fetch and push to separate repos. With triangular workflows, you can have fetch and push apply to separate repos but with the same remote name, which makes this process much more convenient.

Cypress Tests

Cypress is a frontend testing tool for testing applications that run in the browser, with tests that are easy to read and write. It uses browser automation (similar to Selenium) and comes with a browser and relevant dependencies out of the box, so it's very easy to set-up. Cypress also provides a dashboard for convenient monitoring of test runs.

https://docs.cypress.io/guides/overview/why-cypress#In-a-nutshell

Bash Scripting

Bash scripts can be run in a github action workflow, which greatly expands the scope of things you can do with actions. Bash is quite expressive (I hadn't realised just how much it could do). some cool things I learned you could do:

- {$VAR,,} to lowercase string in $VAR.

$*gives parameter values separated by the value inIFS(Internal File Separator).- Pipe output into

python3with a-cflag and perform more complex processing with a single line python program. - Standard output and error can be redirected separately (e.g.

ls 1> out 2> err)

Vue

Being relatively new to frontend tools, I found Vue.js to be quite interesting. Vue allows code reusability and abstractions through components. While I didn’t work extensively on the frontend, what I learned from the bits that I did work on was quite cool:

Vue state: I found it interesting that you could have computed properties that are accessed the same way as properties, but can depend on other properties and can dynamically update when these properties change. This is often more elegant than using a Vue watcher to update a field. You can even have computed setters that update dependent properties when set. A watcher, however, can be more appropriate when responses to changing data are expensive or need to be done asynchronously.

Vue custom directives: Directives are ways to reuse lower level DOM logic. Directives can define vue life-cycle hooks and later be bound to components (can actually take in any JavaScript object literal). For implementing lazy loads, I needed to use the vue-observe-visibility (external library) directive with slight modification to the hooks to be compatible with Vue3.

References:

- https://v2.vuejs.org/v2/guide/computed.html

- https://vuejs.org/guide/reusability/custom-directives.html

Pug

Pug is a templating language that compiles to HTML. It is less verbose and much more maintainable than HTML, and also allows basic presentation logic with conditionals, loops and case statements.

JavaScript Quirks

There are a lot of these, and most just remain quirks but some result in actual unintended bugs in production (often in edge cases). It was interesting to see this in our contribution bar logic. A technique sometimes used to extract the integer part of a number is to use parseInt (it's even suggested in a Stack Overflow answer). It turns out we were using this for calculating the number of contribution bars to display for a user. This works for most values, but breaks when numbers become very large or small (less than 10^-7). In this unlikely situation, we'd display anywhere from 1 to 9 extra bars (moral: use Math.floor instead!).

Browser Engines

An investigation into string representations in browsers led me down a rabbit hole of JavaScript runtimes and engines, and ultimately improved my understanding of JavaScript in general. Different browsers have different JS engines - Chrome uses V8, Firefox uses SpiderMonkey (the original JS engine written by Brendan Eich), Edge used to use Chakra but now also uses V8, Safari uses WebKit, etc. Engines often differ significantly in terms of the pipeline for code execution, garbage collection, and more.

The V8 engine as an example, first parses JavaScript into an Abstract Syntax Tree (AST) which is then compiled into bytecode. This bytecode is interpreted by the Ignition interpreter (Ignition also handles compilation of the AST into bytecode). Code that is revisited often during interpretation is marked "hot" and compiled further into highly efficient machine code. This technique of optimising compilation based on run-time profiling (Just-In-Time (JIT) compilation) is also used in other browser engines like SpiderMonkey and the JVM.

The engine is used for running things that are on the browser stack. JS is run in a single thread, and asynchronous tasks are done through callbacks in a task queue. The main script is first run, with things like promises and timeouts inserting tasks into a task queue. Tasks (and microtasks which are more urgent, lower overhead tasks that can execute when the call stack is empty even while the main script is running) in a task queue wait for the stack to be empty before being executed. Page re-renders are also blocked by running code on the stack (long delays between re-renders are undesirable). Using callbacks and hence not blocking the task queue, allows re-rendering to be done more regularly, improving responsiveness. The precise behaviour of task de-queueing (and lower overhead microtasks) can actually differ between browsers, which causes considerable headache.

References:

- https://cabulous.medium.com/how-v8-javascript-engine-works-5393832d80a7

- https://jakearchibald.com/2015/tasks-microtasks-queues-and-schedules/

- https://www.youtube.com/watch?v=8aGhZQkoFbQ

General Software Engineering/Design Considerations

Discussions over PRs, issues and generally attempting to solve issues, were a great way to explore design considerations. Here is a non-exhaustive list of interesting points that came up this semester:

In-house vs External Library

In implementing new functionality or extending existing functionality (Git interface for example), there is usually a question of whether it would be easier to maintain features in-house, or use external libraries. It might be a good idea to maintain core functionality in-house since we'd want more fine-grained control over these features and new features can be added/fixed quickly as needed. At the same time, external libraries save time and cost of learning about and solving possibly complex problems.

External libraries can however introduce vulnerabilities (several incidences of dependency sabotage with npm packages like color.js and node-ipc hit fairly close to home over the course of the semester). Hence, selection of libraries should be a well-deliberated process and should include considerations like active-ness of the project and diversity of maintainers.

Recency vs Ubiquity

In maintaining versions of dependencies, it is often important to weigh upgrading to a new version to get the newest features against possibly alienating users who don't already have that version. Neither is necessarily better than the other and will likely depend on the nature of the product. A new product for developers would probably have users who want new versions with the bleeding edge of features. On the other hand products that already have a large user base and aimed at less technical users might want to favour ubiquitous versions. Since RepoSense is aimed at users of all skill levels, including novice developers, we often default to the later approach.

In a similar vein, it might be important to make sure that new features don't break backward compatibility so that the end-user won't face significant hindrances with making upgrades. At the same time, the need to be backwards compatible can be a root of evil, introducing all manners of hacks and fixes. This highlights the importance of foresight in the early stages of development. Also, deciding when to stop backwards compatibility with a significant version bump can be a non-trivial decision. Doing so should come with thorough migration documentation (sparse documentation for Vue2 -> Vue3 migration caused a lot of developer grievances).

Isolated Testing

While it's fairly obvious that modularity with tests is important and that components should be tested in isolation with unchanging inputs, it is easy to let slip lapses in the form of hidden dependencies that prevent components from being isolated, or having inputs that are actually non-static. Some of these issues came up over the course of the semester but it struck me just how easy it was for them to slip by unnoticed. There aren't necessarily language-level features that enforce coupling rules for the most part since many of these dependencies can be quite implicit.

This had me thinking about the importance of being explicit in crucial sections of code, as described below.

Being Explicit

It is important that programmers make the behaviour of certain crucial sections of code as explicit as possible. One way of doing this is through good naming of methods and variables, and grouping statements semantically into methods or classes. Large chunks of code is detrimental and allows implicit slips in behaviour that can go unnoticed. So we might even want to make new special classes that do very specific things to make it clear that it is an important subroutine/behaviour that deserves its own abstraction.

At the same time, high reliance on object orientation can lead to too many classes, each class doing trivial things and with high coupling between the classes leading to spaghetti logic that doesn't do very much to alleviate implicit behaviour. There exists a delicate middle ground characterised by semantically well partitioned code.

Behavioural Consistency

The earlier section on Javascript quirks were a result of an overly accommodating feature integration during the early stages of development. It's become a cautionary tale of sorts of the importance of consistency and predictability in behaviour. In adding new features, it was personally very tempting to allow small inconsistencies in behaviour in favour of simplicity of implementation. While simplicity is a desirable outcome, I'd argue that consistency is more important (small inconsistencies can runaway into larger un-fixable differences).

Consistency can be with respect to various things. For example, we might want that identical inputs behave the same under similar conditions (differing in non-semantic respects) or that similar inputs (differing in non-semantic respects) behave the same under the identical conditions, etc.

Miscellaneous helpful tools

- The command line tool GitHub cli provides a very handy way to access GitHub API, and has been useful for checking out PRs, interacting with issues, managing workflows, etc right from the command line.

git bisectis a very nice way to find problematic commits. Given a bad commit and a previously good commit,git bisectdoes a binary search (either automatically with a test or with manual intervention) to find the problematic commit where the issue was introduced.- Search through previously run commands (with the first few characters) with ctrl-r in a bash shell.